import pandas as pd

import matplotlib as mpl

import matplotlib.pyplot as plt

import numpy as np

from pathlib import Path

import pingouin as pg

from lets_plot import *

LetsPlot.setup_html(no_js=True)

plt.style.use(

"https://raw.githubusercontent.com/aeturrell/core_python/main/plot_style.txt"

)practice_1

| Year | Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec | J-D | D-N | DJF | MAM | JJA | SON | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1880 | -0.39 | -0.53 | -0.23 | -0.30 | -0.05 | -0.18 | -0.22 | -0.25 | -0.24 | -0.30 | -0.43 | -0.42 | -0.30 | NaN | NaN | -0.20 | -0.22 | -0.32 |

| 1 | 1881 | -0.31 | -0.25 | -0.06 | -0.02 | 0.05 | -0.34 | 0.09 | -0.06 | -0.28 | -0.44 | -0.37 | -0.24 | -0.19 | -0.20 | -0.33 | -0.01 | -0.10 | -0.37 |

| 2 | 1882 | 0.25 | 0.21 | 0.02 | -0.30 | -0.23 | -0.29 | -0.28 | -0.15 | -0.25 | -0.52 | -0.33 | -0.68 | -0.21 | -0.17 | 0.08 | -0.17 | -0.24 | -0.37 |

| 3 | 1883 | -0.57 | -0.66 | -0.15 | -0.30 | -0.26 | -0.12 | -0.06 | -0.23 | -0.34 | -0.17 | -0.44 | -0.15 | -0.29 | -0.33 | -0.64 | -0.23 | -0.14 | -0.32 |

| 4 | 1884 | -0.16 | -0.11 | -0.64 | -0.59 | -0.36 | -0.41 | -0.41 | -0.52 | -0.45 | -0.44 | -0.58 | -0.47 | -0.43 | -0.40 | -0.14 | -0.53 | -0.45 | -0.49 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 140 | 2020 | 1.59 | 1.69 | 1.66 | 1.39 | 1.27 | 1.14 | 1.10 | 1.12 | 1.19 | 1.21 | 1.58 | 1.18 | 1.34 | 1.36 | 1.56 | 1.44 | 1.12 | 1.33 |

| 141 | 2021 | 1.25 | 0.96 | 1.20 | 1.13 | 1.05 | 1.21 | 1.07 | 1.02 | 1.05 | 1.29 | 1.29 | 1.17 | 1.14 | 1.14 | 1.13 | 1.13 | 1.10 | 1.21 |

| 142 | 2022 | 1.24 | 1.16 | 1.41 | 1.09 | 1.02 | 1.13 | 1.06 | 1.17 | 1.15 | 1.31 | 1.09 | 1.06 | 1.16 | 1.17 | 1.19 | 1.17 | 1.12 | 1.19 |

| 143 | 2023 | 1.29 | 1.29 | 1.64 | 1.01 | 1.13 | 1.19 | 1.44 | 1.57 | 1.67 | 1.88 | 1.97 | 1.85 | 1.50 | 1.43 | 1.22 | 1.26 | 1.40 | 1.84 |

| 144 | 2024 | 1.67 | 1.93 | 1.77 | 1.79 | 1.44 | 1.54 | 1.42 | 1.42 | 1.58 | 1.72 | 1.90 | NaN | NaN | 1.67 | 1.82 | 1.67 | 1.46 | 1.73 |

145 rows × 19 columns

| Year | Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec | J-D | D-N | DJF | MAM | JJA | SON | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1880 | -0.39 | -0.53 | -0.23 | -0.30 | -0.05 | -0.18 | -0.22 | -0.25 | -0.24 | -0.30 | -0.43 | -0.42 | -0.30 | NaN | NaN | -0.20 | -0.22 | -0.32 |

| 1 | 1881 | -0.31 | -0.25 | -0.06 | -0.02 | 0.05 | -0.34 | 0.09 | -0.06 | -0.28 | -0.44 | -0.37 | -0.24 | -0.19 | -0.20 | -0.33 | -0.01 | -0.10 | -0.37 |

| 2 | 1882 | 0.25 | 0.21 | 0.02 | -0.30 | -0.23 | -0.29 | -0.28 | -0.15 | -0.25 | -0.52 | -0.33 | -0.68 | -0.21 | -0.17 | 0.08 | -0.17 | -0.24 | -0.37 |

| 3 | 1883 | -0.57 | -0.66 | -0.15 | -0.30 | -0.26 | -0.12 | -0.06 | -0.23 | -0.34 | -0.17 | -0.44 | -0.15 | -0.29 | -0.33 | -0.64 | -0.23 | -0.14 | -0.32 |

| 4 | 1884 | -0.16 | -0.11 | -0.64 | -0.59 | -0.36 | -0.41 | -0.41 | -0.52 | -0.45 | -0.44 | -0.58 | -0.47 | -0.43 | -0.40 | -0.14 | -0.53 | -0.45 | -0.49 |

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 145 entries, 0 to 144

Data columns (total 19 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Year 145 non-null int64

1 Jan 145 non-null float64

2 Feb 145 non-null float64

3 Mar 145 non-null float64

4 Apr 145 non-null float64

5 May 145 non-null float64

6 Jun 145 non-null float64

7 Jul 145 non-null float64

8 Aug 145 non-null float64

9 Sep 145 non-null float64

10 Oct 145 non-null float64

11 Nov 145 non-null float64

12 Dec 144 non-null float64

13 J-D 144 non-null float64

14 D-N 144 non-null float64

15 DJF 144 non-null float64

16 MAM 145 non-null float64

17 JJA 145 non-null float64

18 SON 145 non-null float64

dtypes: float64(18), int64(1)

memory usage: 21.7 KB| Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec | J-D | D-N | DJF | MAM | JJA | SON | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Year | ||||||||||||||||||

| 1880 | -0.39 | -0.53 | -0.23 | -0.30 | -0.05 | -0.18 | -0.22 | -0.25 | -0.24 | -0.30 | -0.43 | -0.42 | -0.30 | NaN | NaN | -0.20 | -0.22 | -0.32 |

| 1881 | -0.31 | -0.25 | -0.06 | -0.02 | 0.05 | -0.34 | 0.09 | -0.06 | -0.28 | -0.44 | -0.37 | -0.24 | -0.19 | -0.20 | -0.33 | -0.01 | -0.10 | -0.37 |

| 1882 | 0.25 | 0.21 | 0.02 | -0.30 | -0.23 | -0.29 | -0.28 | -0.15 | -0.25 | -0.52 | -0.33 | -0.68 | -0.21 | -0.17 | 0.08 | -0.17 | -0.24 | -0.37 |

| 1883 | -0.57 | -0.66 | -0.15 | -0.30 | -0.26 | -0.12 | -0.06 | -0.23 | -0.34 | -0.17 | -0.44 | -0.15 | -0.29 | -0.33 | -0.64 | -0.23 | -0.14 | -0.32 |

| 1884 | -0.16 | -0.11 | -0.64 | -0.59 | -0.36 | -0.41 | -0.41 | -0.52 | -0.45 | -0.44 | -0.58 | -0.47 | -0.43 | -0.40 | -0.14 | -0.53 | -0.45 | -0.49 |

| Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec | J-D | D-N | DJF | MAM | JJA | SON | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Year | ||||||||||||||||||

| 2020 | 1.59 | 1.69 | 1.66 | 1.39 | 1.27 | 1.14 | 1.10 | 1.12 | 1.19 | 1.21 | 1.58 | 1.18 | 1.34 | 1.36 | 1.56 | 1.44 | 1.12 | 1.33 |

| 2021 | 1.25 | 0.96 | 1.20 | 1.13 | 1.05 | 1.21 | 1.07 | 1.02 | 1.05 | 1.29 | 1.29 | 1.17 | 1.14 | 1.14 | 1.13 | 1.13 | 1.10 | 1.21 |

| 2022 | 1.24 | 1.16 | 1.41 | 1.09 | 1.02 | 1.13 | 1.06 | 1.17 | 1.15 | 1.31 | 1.09 | 1.06 | 1.16 | 1.17 | 1.19 | 1.17 | 1.12 | 1.19 |

| 2023 | 1.29 | 1.29 | 1.64 | 1.01 | 1.13 | 1.19 | 1.44 | 1.57 | 1.67 | 1.88 | 1.97 | 1.85 | 1.50 | 1.43 | 1.22 | 1.26 | 1.40 | 1.84 |

| 2024 | 1.67 | 1.93 | 1.77 | 1.79 | 1.44 | 1.54 | 1.42 | 1.42 | 1.58 | 1.72 | 1.90 | NaN | NaN | 1.67 | 1.82 | 1.67 | 1.46 | 1.73 |

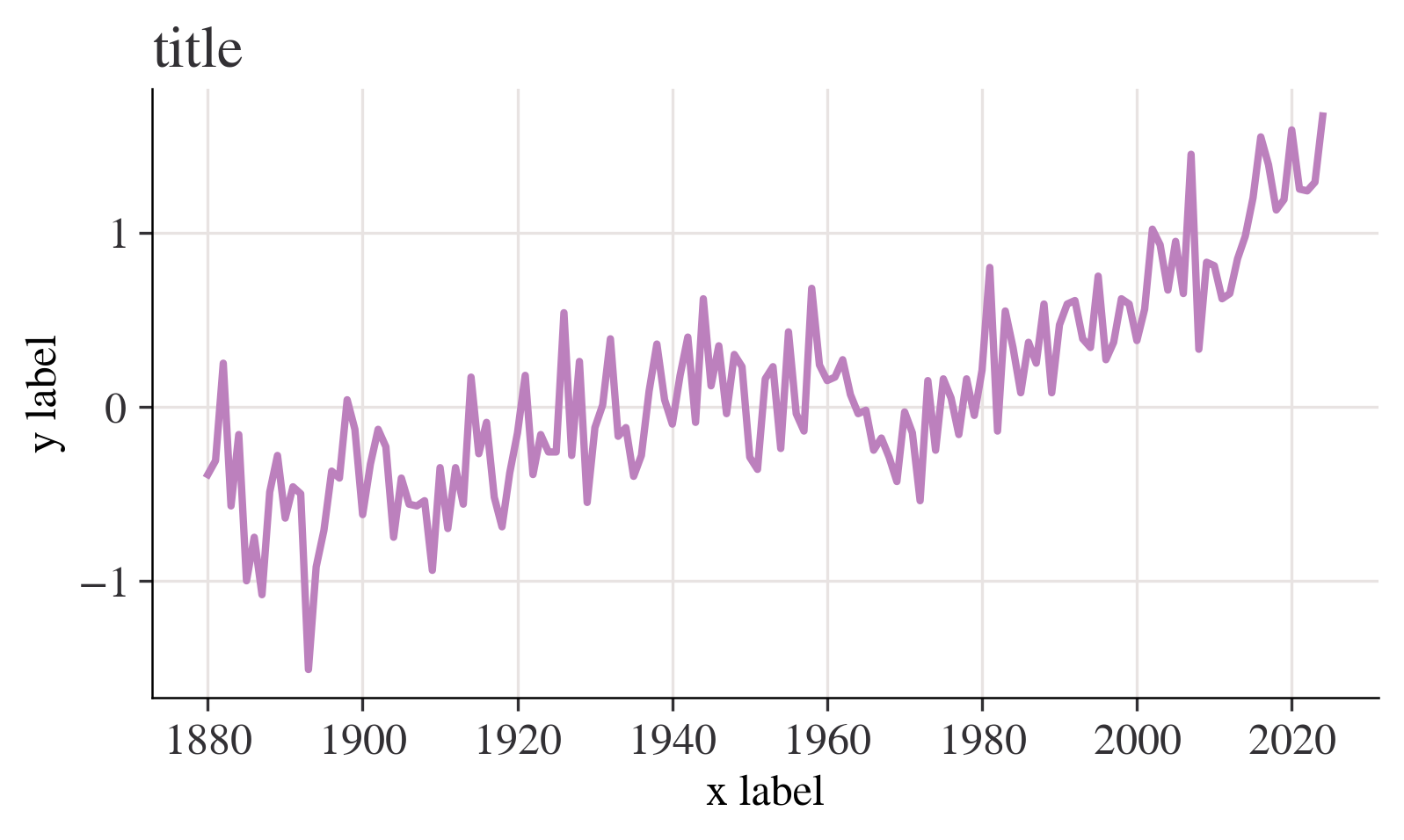

fig, ax = plt.subplots()

df["Jan"].plot(ax=ax)

ax.set_ylabel("y label")

ax.set_xlabel("x label")

ax.set_title("title")

plt.show()

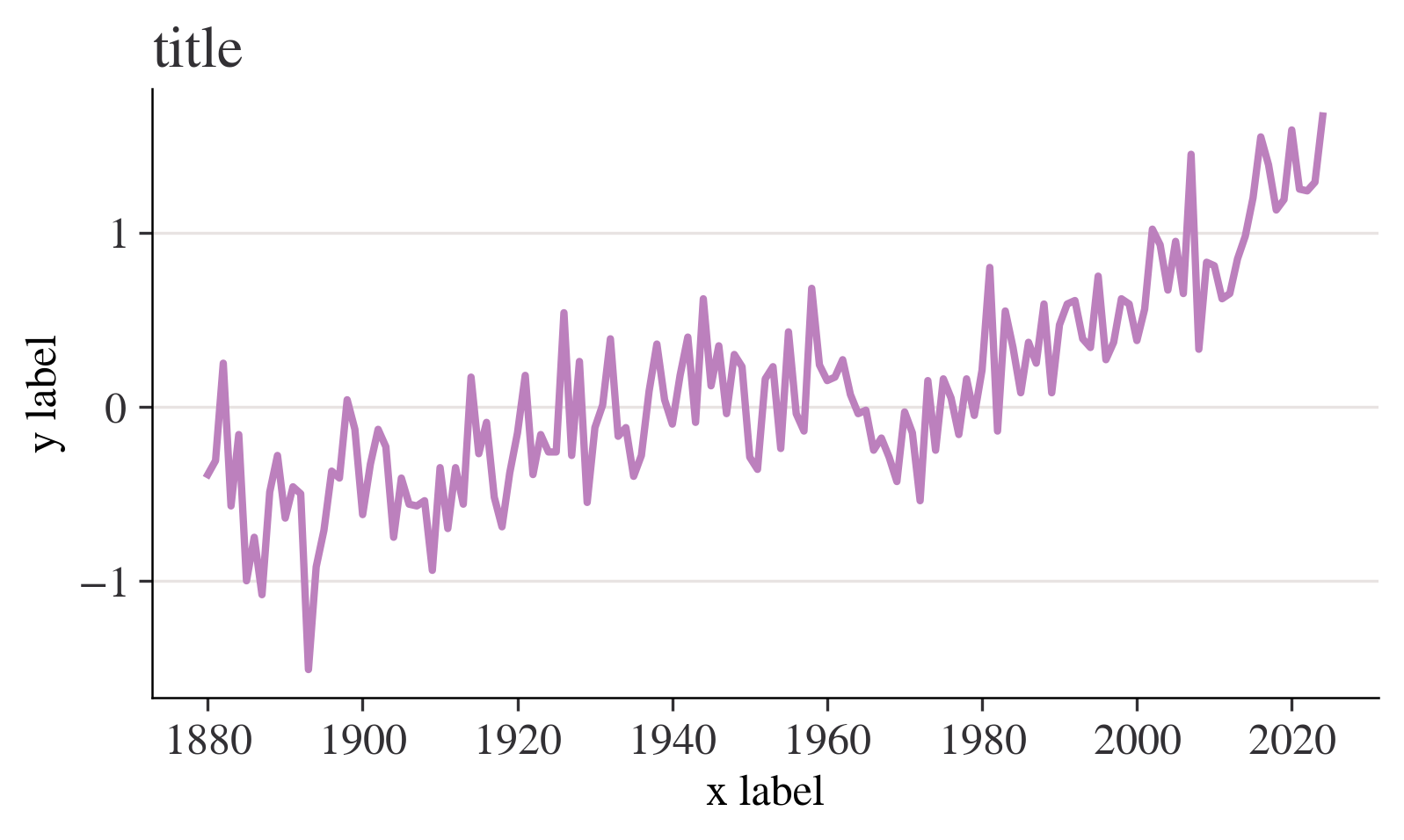

fig, ax = plt.subplots()

ax.plot(df.index, df["Jan"])

ax.set_ylabel("y label")

ax.set_xlabel("x label")

ax.set_title("title")

plt.show()

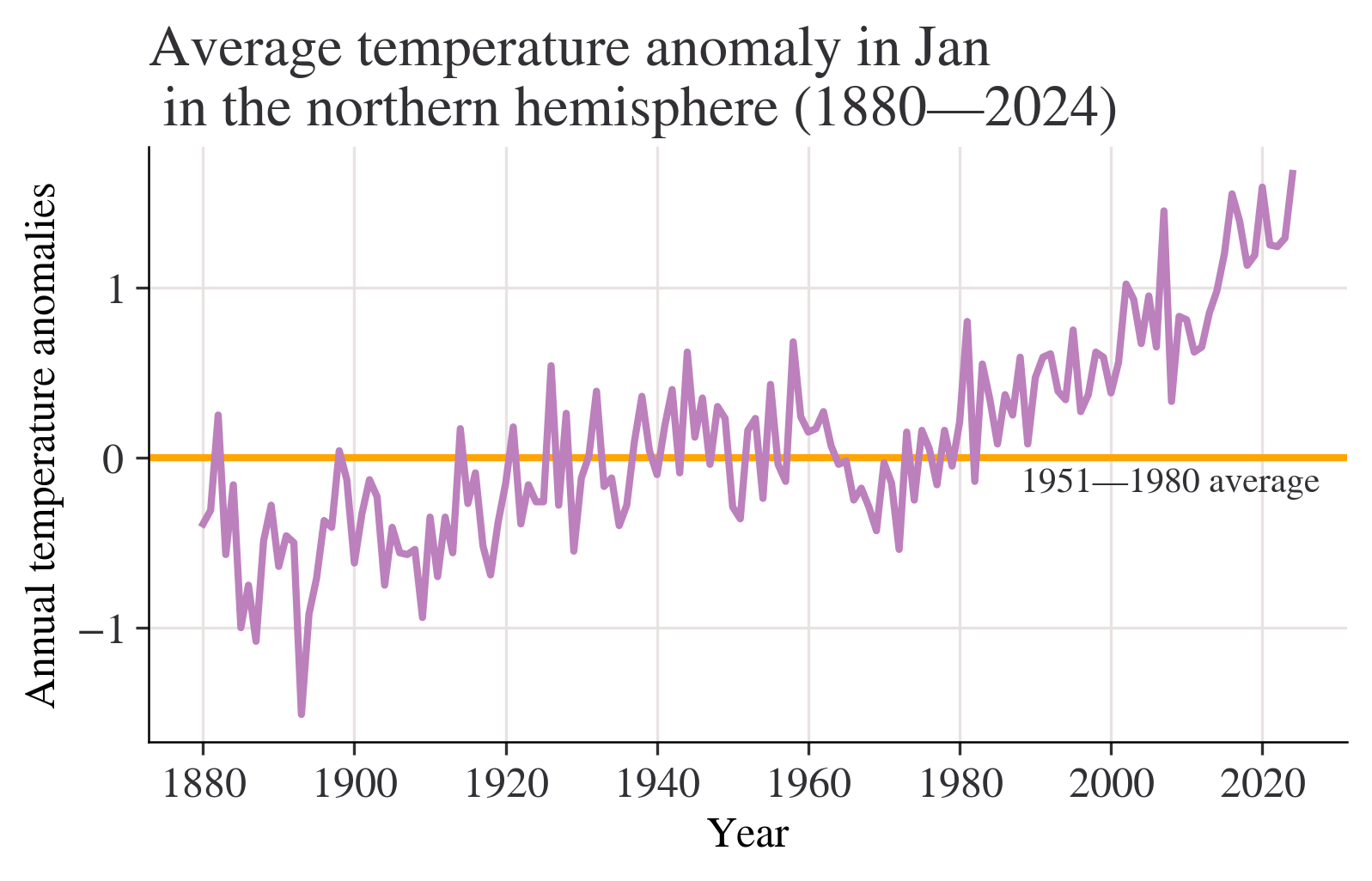

month = "Jan"

fig, ax = plt.subplots()

ax.axhline(0, color="orange")

ax.annotate("1951—1980 average", xy=(0.66, -0.2), xycoords=("figure fraction", "data"))

df[month].plot(ax=ax)

ax.set_title(

f"Average temperature anomaly in {month} \n in the northern hemisphere (1880—{df.index.max()})"

)

ax.set_ylabel("Annual temperature anomalies");

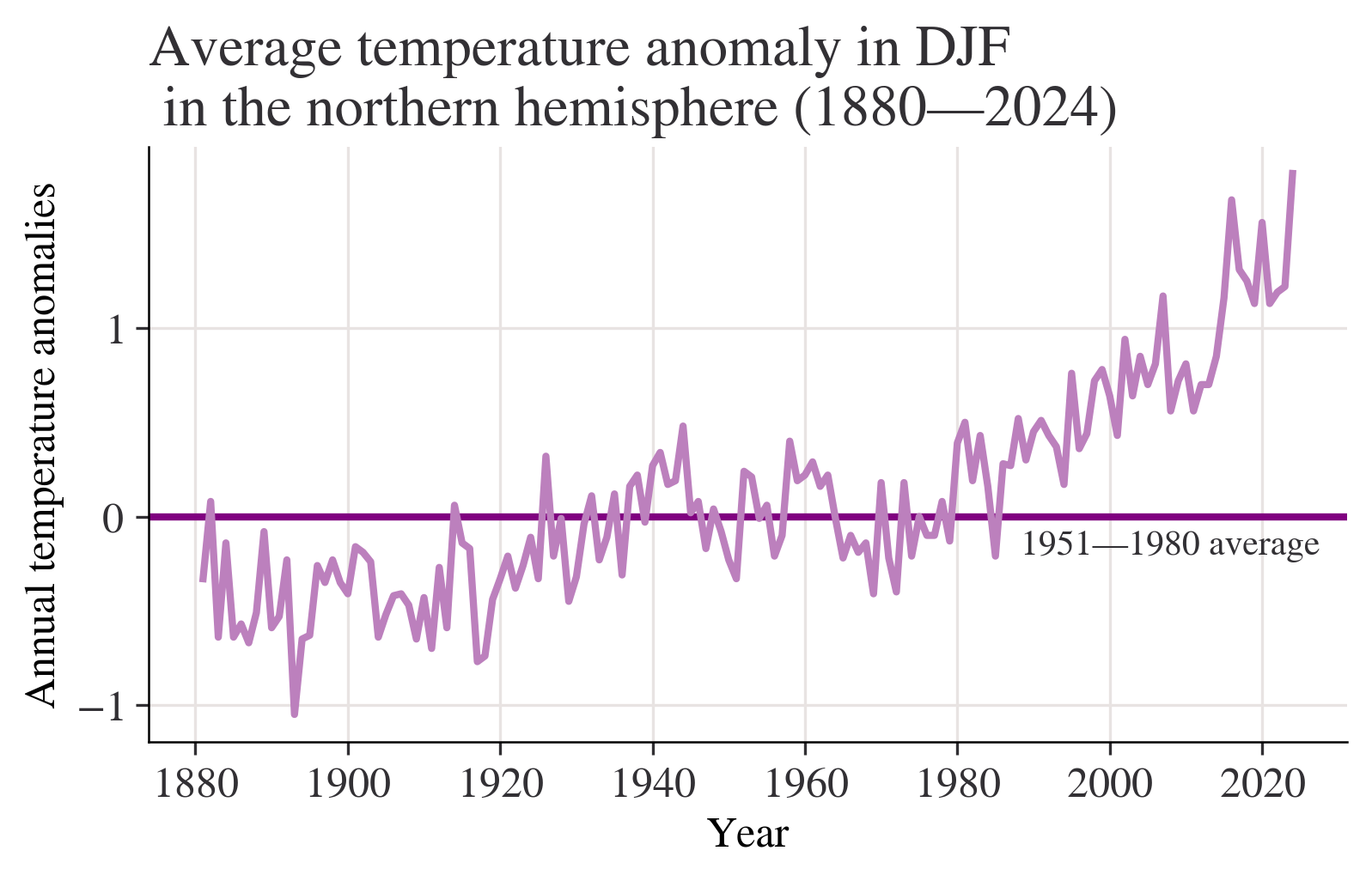

season = "DJF"

fig, ax = plt.subplots()

ax.axhline(0, color="purple")

ax.annotate("1951—1980 average", xy=(0.66, -0.2), xycoords=("figure fraction", "data"))

df[season].plot(ax=ax)

ax.set_title(

f"Average temperature anomaly in {season} \n in the northern hemisphere (1880—{df.index.max()})"

)

ax.set_ylabel("Annual temperature anomalies");

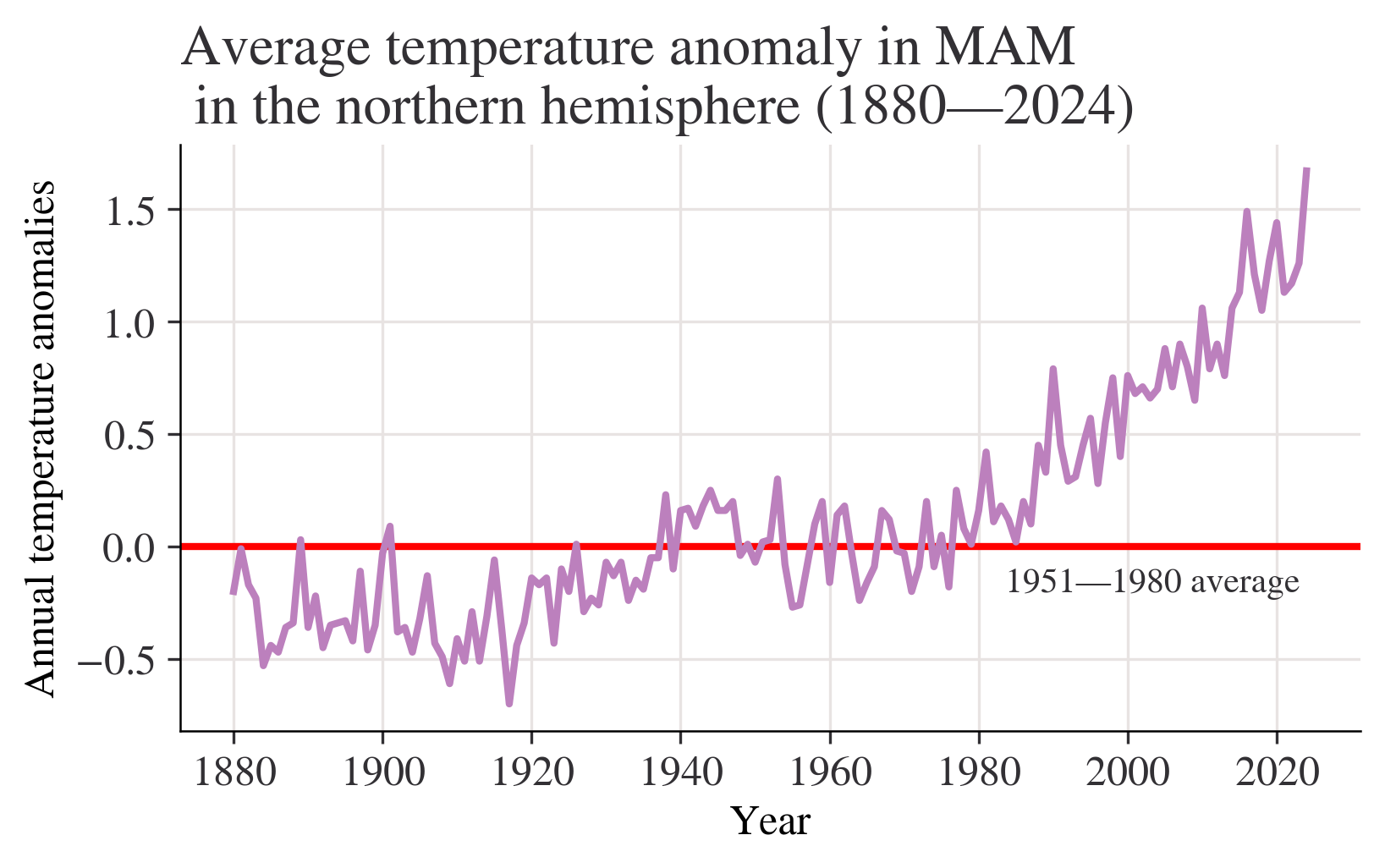

season = "MAM"

fig, ax = plt.subplots()

ax.axhline(0, color="red")

ax.annotate("1951—1980 average", xy=(0.66, -0.2), xycoords=("figure fraction", "data"))

df[season].plot(ax=ax)

ax.set_title(

f"Average temperature anomaly in {season} \n in the northern hemisphere (1880—{df.index.max()})"

)

ax.set_ylabel("Annual temperature anomalies");

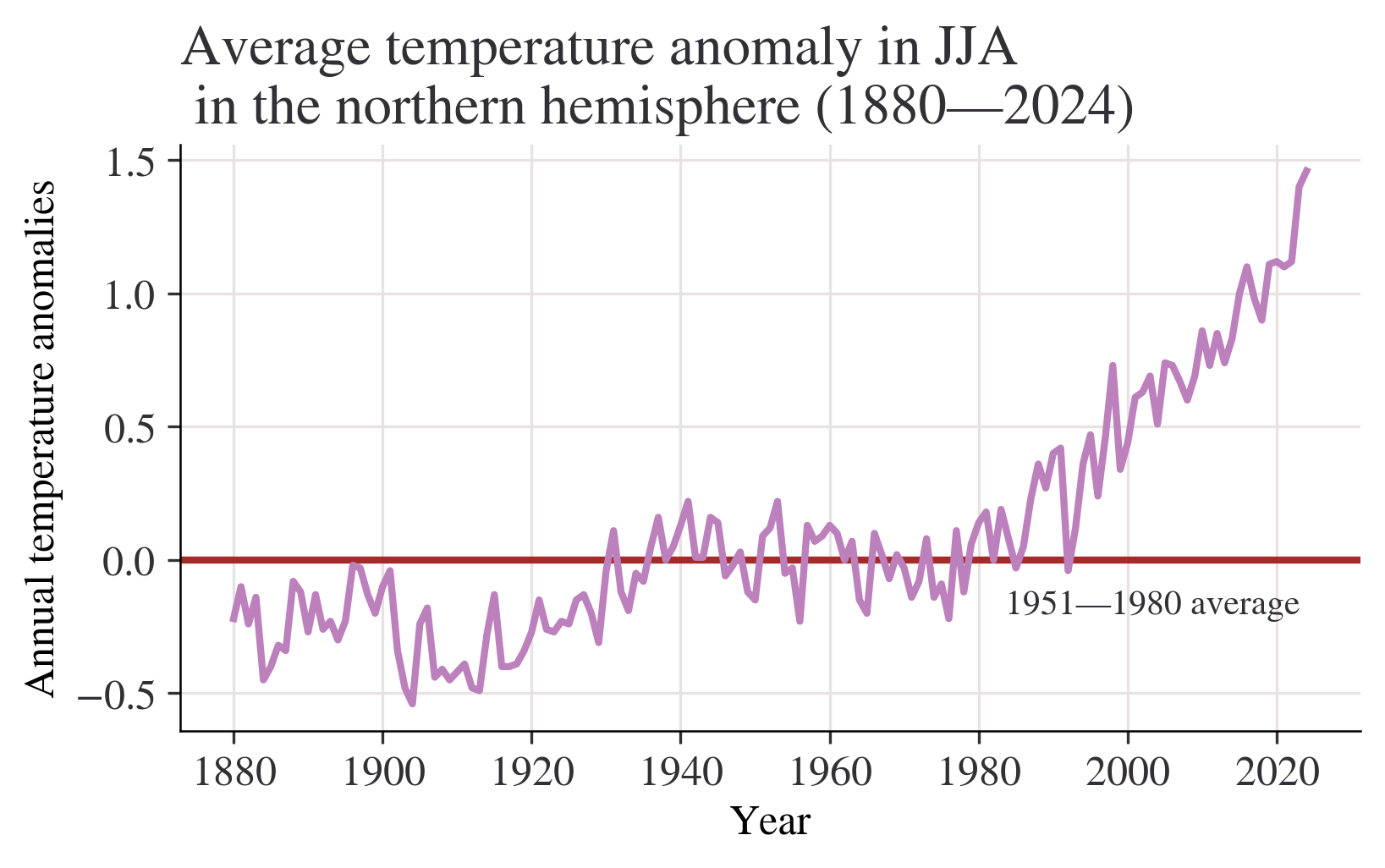

season = "JJA"

fig, ax = plt.subplots()

ax.axhline(0, color="brown")

ax.annotate("1951—1980 average", xy=(0.66, -0.2), xycoords=("figure fraction", "data"))

df[season].plot(ax=ax)

ax.set_title(

f"Average temperature anomaly in {season} \n in the northern hemisphere (1880—{df.index.max()})"

)

ax.set_ylabel("Annual temperature anomalies");

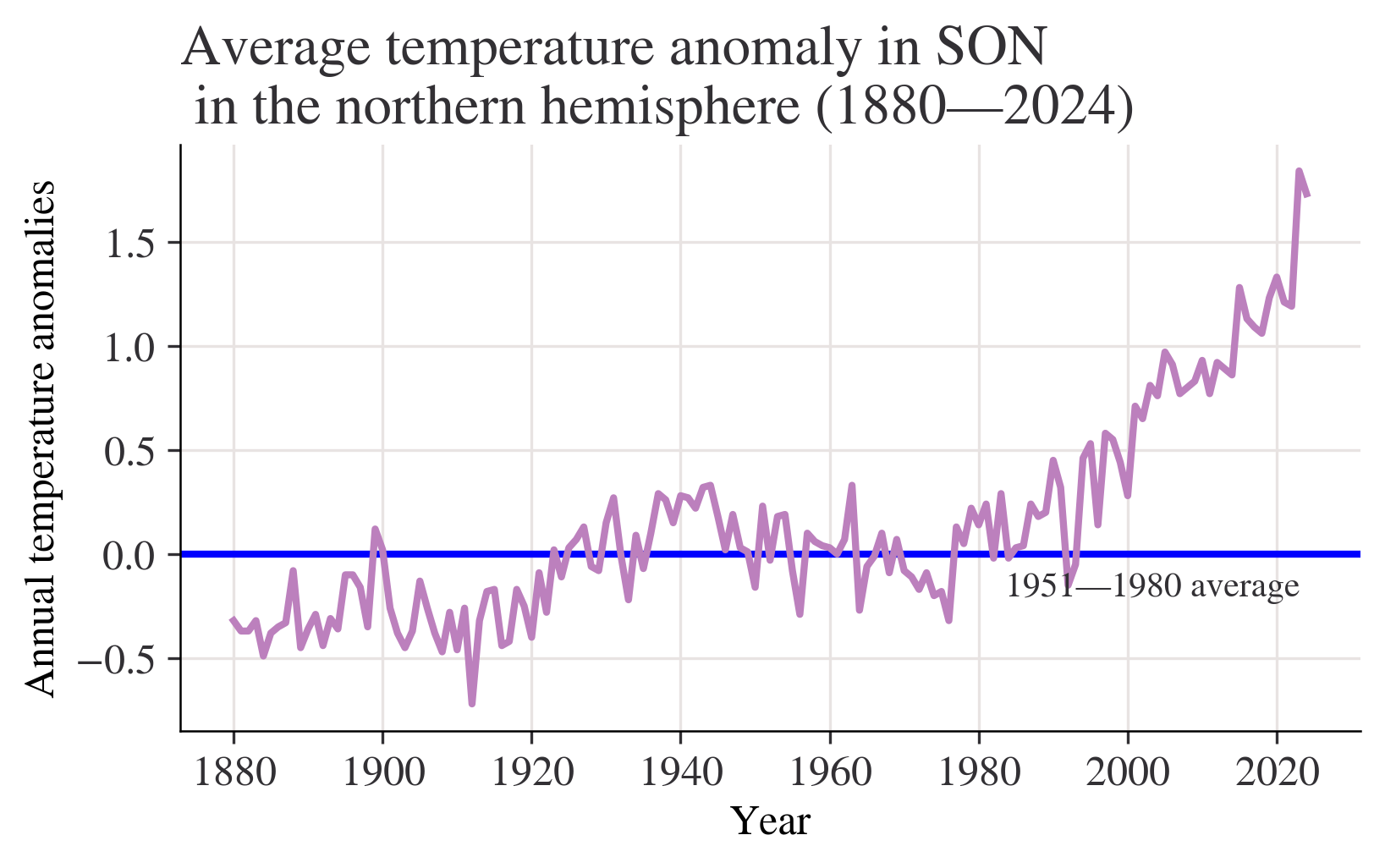

season = "SON"

fig, ax = plt.subplots()

ax.axhline(0, color="blue")

ax.annotate("1951—1980 average", xy=(0.66, -0.2), xycoords=("figure fraction", "data"))

df[season].plot(ax=ax)

ax.set_title(

f"Average temperature anomaly in {season} \n in the northern hemisphere (1880—{df.index.max()})"

)

ax.set_ylabel("Annual temperature anomalies");

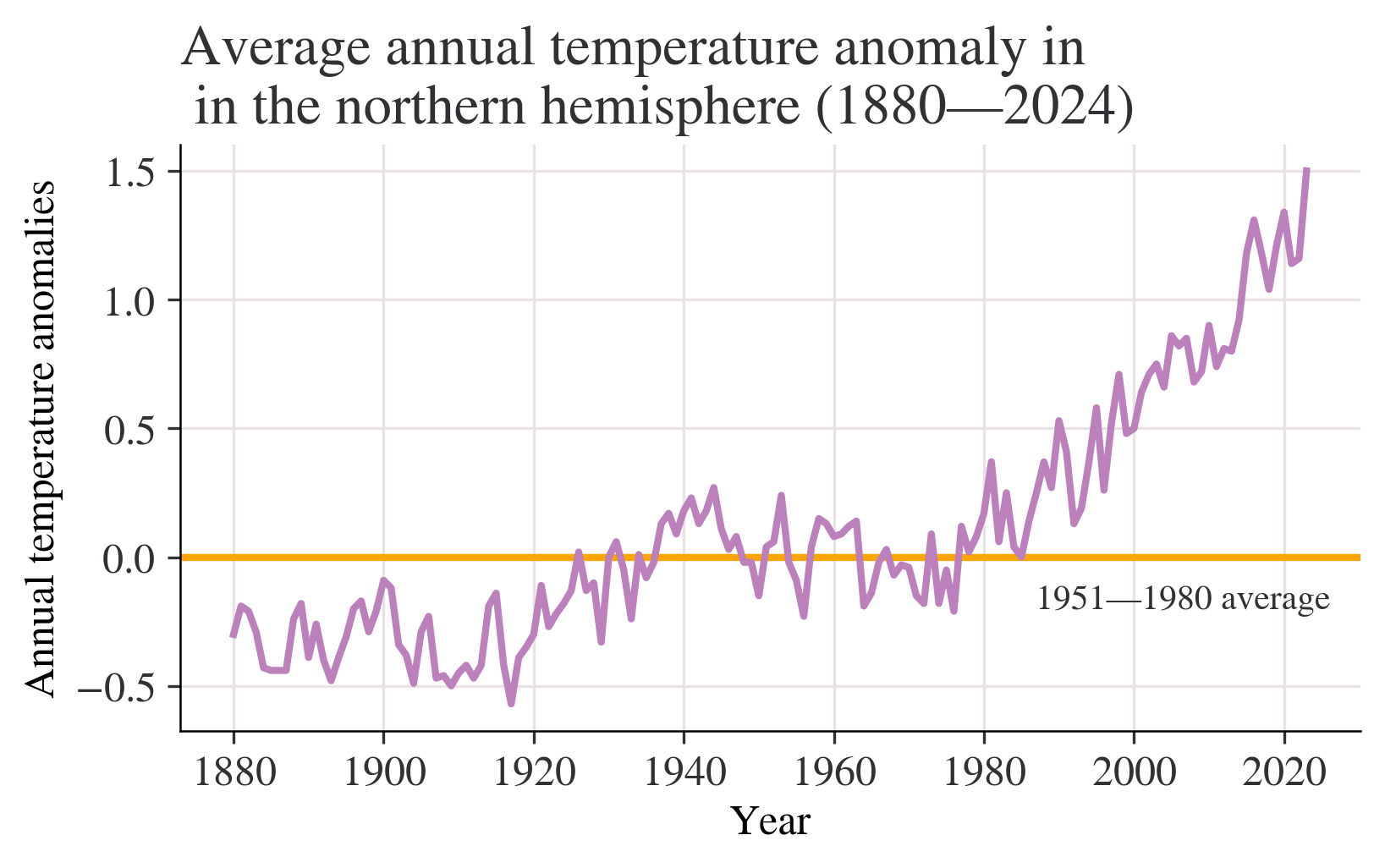

Question:What do your charts from Questions 2 to 4(a) suggest about the relationship between temperature and time? Answer:Chart from question 2 shows that most of temperature anomalies are between -1 - 1,the year with the lowest temperature anomaly is between 1880-1900,the highest is in 2020.Although the data is floating up or down,the overall trend is slowly upward. We can get a more clearly Chart from question 3 with titles of x label and y label.Besides,a horizontal line is added to make the chart easier to read.We can easily see how these statistics float with the average line marked. Chart from question 4(a) shows that the overall trend is slowly upward.Data from 1880 to 2000 is rising slowly,but we can see that after 2000,(2000-2020) the chart shows a trend which is soaring dramatically without obvious decrease.

month = "J-D"

fig, ax = plt.subplots()

ax.axhline(0, color="orange")

ax.annotate("1951—1980 average", xy=(0.68, -0.2), xycoords=("figure fraction", "data"))

df[month].plot(ax=ax)

ax.set_title(

f"Average annual temperature anomaly in \n in the northern hemisphere (1880—{df.index.max()})"

)

ax.set_ylabel("Annual temperature anomalies");

Question of 6(a):Discuss the similarities and differences between the charts. answer of 6(a):the variables of horizontal axes are both years,the variables of vertical axes are both temperatures,but the specific statistics are different,the gaps between two numbers are different,too.The lines are not the same.The chart from question 4 shows a slowly rising trend.However,the chart from Figure 1.5 shows no obvious rising. Question of 6(b):Looking at the behaviour of temperature over time from 1000 to 1900 in Figure 1.4, are the observed patterns in your chart unusual? answer of 6(b):I thought the question may be wrong?It should be Figure 1.5? I will answer the question based on Figure 1.5. These data fluctuate from -0.6 - 0 from 1000 to 1900.The overall trend are basically flat without sharp ascent or descent.It may not be unusual. Question of 6(c):Based on your answers to Questions 4 and 5, do you think the government should be concerned about climate change? answer of 6(c):I think the government should be concerned about climate change with no doubt.The statistics from Questions 4 and 5 shows the rising temperature these years.We can even see an obvious sharp rise after 2020,which shows the temperature of North Hemisphere is higer and higher because of the global warming,I think.The problem of the environment should be noticed and stressed.

df["Period"] = pd.cut(

df.index,

bins=[1921, 1950, 1980, 2010],

labels=["1921—1950", "1951—1980", "1981—2010"],

ordered=True,

)

df["Period"].tail(20)Year

2005 1981—2010

2006 1981—2010

2007 1981—2010

2008 1981—2010

2009 1981—2010

2010 1981—2010

2011 NaN

2012 NaN

2013 NaN

2014 NaN

2015 NaN

2016 NaN

2017 NaN

2018 NaN

2019 NaN

2020 NaN

2021 NaN

2022 NaN

2023 NaN

2024 NaN

Name: Period, dtype: category

Categories (3, object): ['1921—1950' < '1951—1980' < '1981—2010']Year

1880 Jun -0.18

Jul -0.22

Aug -0.25

1881 Jun -0.34

Jul 0.09

dtype: float64fig, axes = plt.subplots(ncols=3, figsize=(9, 4), sharex=True, sharey=True)

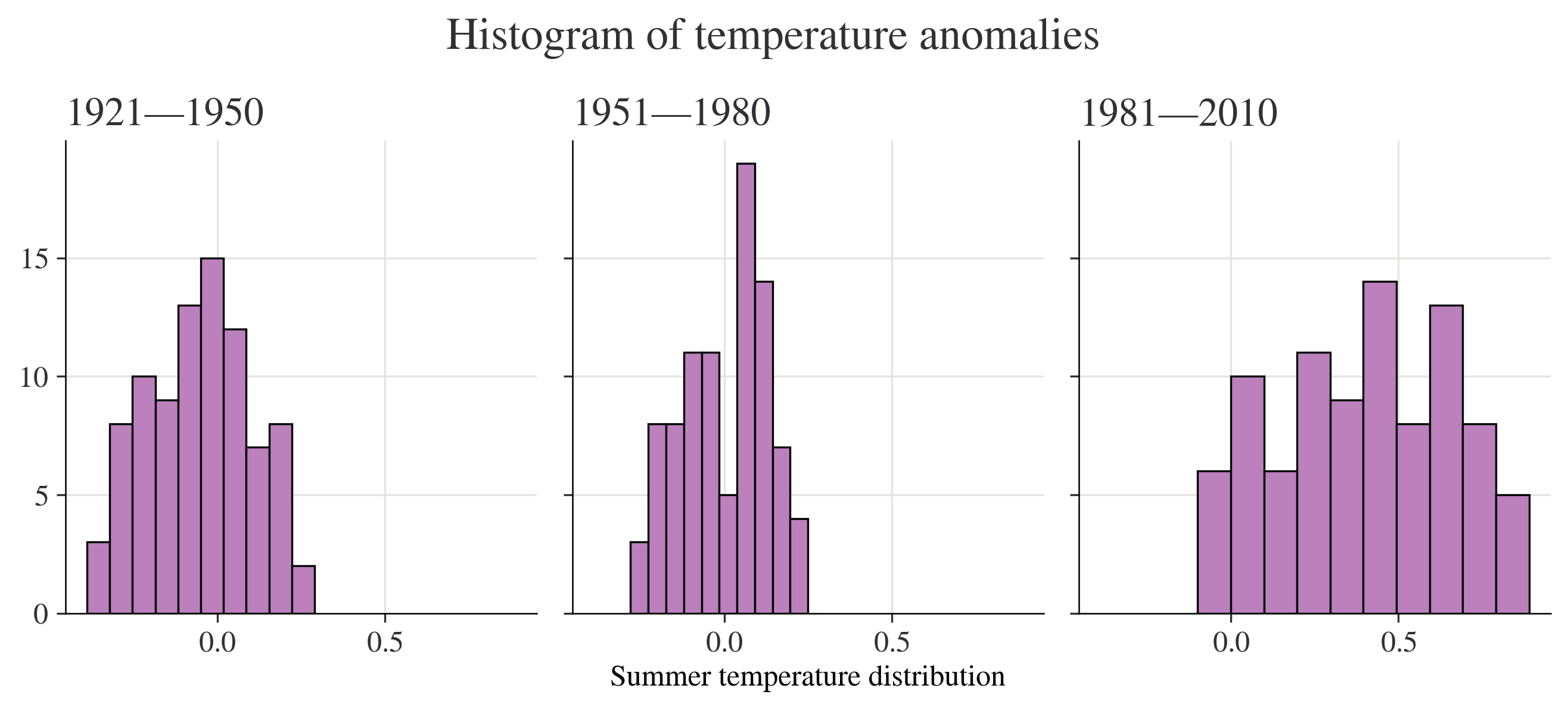

for ax, period in zip(axes, df["Period"].dropna().unique()):

df.loc[df["Period"] == period, list_of_months].stack().hist(ax=ax)

ax.set_title(period)

plt.suptitle("Histogram of temperature anomalies")

axes[1].set_xlabel("Summer temperature distribution")

plt.tight_layout();

Question of 1.4 2(b):Using your charts, describe the similarities and differences (if any) between the distributions of temperature anomalies in 1951–1980 and 1981–2010. answer of 1.4 2(b):most of the count of the number of anomalies that fall in the interval of these two charts are between 0-15.The bin width is also different.Bins of 1951-1980 differs from -0.3 - 0.25.However,Bins of 1981-2010 differs from -0.1 - 0.9.The most frequently encountered temperature interval of 1951-1980 is 20,which is far higher than that of 1981-2010.

# Create a variable that has years 1951 to 1980, and months Jan to Dec (inclusive)

temp_all_months = df.loc[(df.index >= 1951) & (df.index <= 1980), "Jan":"Dec"]

# Put all the data in stacked format and give the new columns sensible names

temp_all_months = (

temp_all_months.stack()

.reset_index()

.rename(columns={"level_1": "month", 0: "values"})

)

# Take a look at this data:

temp_all_months| Year | month | values | |

|---|---|---|---|

| 0 | 1951 | Jan | -0.36 |

| 1 | 1951 | Feb | -0.51 |

| 2 | 1951 | Mar | -0.18 |

| 3 | 1951 | Apr | 0.06 |

| 4 | 1951 | May | 0.17 |

| ... | ... | ... | ... |

| 355 | 1980 | Aug | 0.10 |

| 356 | 1980 | Sep | 0.10 |

| 357 | 1980 | Oct | 0.12 |

| 358 | 1980 | Nov | 0.21 |

| 359 | 1980 | Dec | 0.09 |

360 rows × 3 columns

quantiles = [0.3, 0.7]

list_of_percentiles = np.quantile(temp_all_months["values"], q=quantiles)

print(f"The cold threshold of {quantiles[0]*100}% is {list_of_percentiles[0]}")

print(f"The hot threshold of {quantiles[1]*100}% is {list_of_percentiles[1]}")The cold threshold of 30.0% is -0.1

The hot threshold of 70.0% is 0.1Question of 1.5 4:Does your answer suggest that we are experiencing hotter weather more frequently in this timeframe? Answer of 1.5 4:According to’In decile terms, temperatures in the 1st to 3rd deciles are ‘cold’ and temperatures in the 7th to 10th deciles or above are ‘hot’’,obviously,we are experiencing hotter weather more frequently in this timeframe.

# Create a variable that has years 1981 to 2010, and months Jan to Dec (inclusive)

temp_all_months = df.loc[(df.index >= 1981) & (df.index <= 2010), "Jan":"Dec"]

# Put all the data in stacked format and give the new columns sensible names

temp_all_months = (

temp_all_months.stack()

.reset_index()

.rename(columns={"level_1": "month", 0: "values"})

)

# Take a look at the start of this data data:

temp_all_months.head()| Year | month | values | |

|---|---|---|---|

| 0 | 1981 | Jan | 0.80 |

| 1 | 1981 | Feb | 0.62 |

| 2 | 1981 | Mar | 0.68 |

| 3 | 1981 | Apr | 0.39 |

| 4 | 1981 | May | 0.18 |

entries_less_than_q30 = temp_all_months["values"] < list_of_percentiles[0]

proportion_under_q30 = entries_less_than_q30.mean()

print(

f"The proportion under {list_of_percentiles[0]} is {proportion_under_q30*100:.2f}%"

)The proportion under -0.1 is 1.94%proportion_over_q70 = (temp_all_months["values"] > list_of_percentiles[1]).mean()

print(f"The proportion over {list_of_percentiles[1]} is {proportion_over_q70*100:.2f}%")The proportion over 0.1 is 84.72%temp_all_months = (

df.loc[:, "DJF":"SON"]

.stack()

.reset_index()

.rename(columns={"level_1": "Season", 0: "Values"})

)

temp_all_months["Period"] = pd.cut(

temp_all_months["Year"],

bins=[1921, 1950, 1980, 2010],

labels=["1921—1950", "1951—1980", "1981—2010"],

ordered=True,

)

# Take a look at a cut of the data using `.iloc`, which provides position

temp_all_months.iloc[-135:-125]| Year | Season | Values | Period | |

|---|---|---|---|---|

| 444 | 1991 | MAM | 0.45 | 1981—2010 |

| 445 | 1991 | JJA | 0.42 | 1981—2010 |

| 446 | 1991 | SON | 0.32 | 1981—2010 |

| 447 | 1992 | DJF | 0.43 | 1981—2010 |

| 448 | 1992 | MAM | 0.29 | 1981—2010 |

| 449 | 1992 | JJA | -0.04 | 1981—2010 |

| 450 | 1992 | SON | -0.15 | 1981—2010 |

| 451 | 1993 | DJF | 0.37 | 1981—2010 |

| 452 | 1993 | MAM | 0.31 | 1981—2010 |

| 453 | 1993 | JJA | 0.12 | 1981—2010 |

grp_mean_var = temp_all_months.groupby(["Season", "Period"])["Values"].agg(

[np.mean, np.var]

)

grp_mean_var| mean | var | ||

|---|---|---|---|

| Season | Period | ||

| DJF | 1921—1950 | -0.025862 | 0.057489 |

| 1951—1980 | -0.002000 | 0.050548 | |

| 1981—2010 | 0.523333 | 0.078975 | |

| JJA | 1921—1950 | -0.053448 | 0.021423 |

| 1951—1980 | 0.000000 | 0.014697 | |

| 1981—2010 | 0.400000 | 0.067524 | |

| MAM | 1921—1950 | -0.041034 | 0.031302 |

| 1951—1980 | 0.000333 | 0.025245 | |

| 1981—2010 | 0.509333 | 0.075737 | |

| SON | 1921—1950 | 0.083448 | 0.027473 |

| 1951—1980 | -0.001333 | 0.026205 | |

| 1981—2010 | 0.429000 | 0.111127 |

min_year = 1880

(

ggplot(temp_all_months, aes(x="Year", y="Values", color="Season"))

+ geom_abline(slope=0, color="black", size=1)

+ geom_line(size=1)

+ labs(

title=f"Average annual temperature anomaly in \n in the northern hemisphere ({min_year}—{temp_all_months['Year'].max()})",

y="Annual temperature anomalies",

)

+ scale_x_continuous(format="d")

+ geom_text(

x=min_year, y=0.1, label="1951—1980 average", hjust="left", color="black"

)

)Question of 1.6 5(b):For each season, compare the variances in different periods, and explain whether or not temperature appears to be more variable in later periods answer of 1.6 5(b):We can see that variances in different periods differs.But the difference between 1921-1950 and 1950-1980 is very small.However,the variance in 1981-2010 is upward sharply.So we can say temperature appears to be more variable in later periods Question of 1.7 6:whether temperature appears to be more variable over time. Would you advise the government to spend more money on mitigating the effects of extreme weather events? Answer of 1.7 6:Of course temperature appears to be more variable over time.,especially in 1981-2010,temperature is rising obviously,which shows the environment is getting worse and worse.I strongly recommend the government to spend more money on mitigating the effects of extreme weather events,otherwise,we human beings have to face the bad result.

| Year | Month | Monthly average | Interpolated | Trend | |

|---|---|---|---|---|---|

| 0 | 1958 | 3 | 315.71 | 315.71 | 314.62 |

| 1 | 1958 | 4 | 317.45 | 317.45 | 315.29 |

| 2 | 1958 | 5 | 317.50 | 317.50 | 314.71 |

| 3 | 1958 | 6 | -99.99 | 317.10 | 314.85 |

| 4 | 1958 | 7 | 315.86 | 315.86 | 314.98 |

| Year | Month | Monthly average | Interpolated | Trend | |

|---|---|---|---|---|---|

| 3 | 1958 | 6 | -99.99 | 317.10 | 314.85 |

| 15 | 1959 | 6 | 318.15 | 318.15 | 315.92 |

| 27 | 1960 | 6 | 319.59 | 319.59 | 317.36 |

| 39 | 1961 | 6 | 319.77 | 319.77 | 317.48 |

| 51 | 1962 | 6 | 320.55 | 320.55 | 318.27 |

| Year | Jun | Trend | |

|---|---|---|---|

| 0 | 1958 | 0.05 | 314.85 |

| 1 | 1959 | 0.14 | 315.92 |

| 2 | 1960 | 0.18 | 317.36 |

| 3 | 1961 | 0.18 | 317.48 |

| 4 | 1962 | -0.13 | 318.27 |

(

ggplot(df_temp_co2, aes(x="Jun", y="Trend"))

+ geom_point(color="black", size=3)

+ labs(

title="Scatterplot of temperature anomalies vs carbon dioxide emissions",

y="Carbon dioxide levels (trend, mole fraction)",

x="Temperature anomaly (degrees Celsius)",

)

)| Jun | Trend | |

|---|---|---|

| Jun | 1.000000 | 0.915419 |

| Trend | 0.915419 | 1.000000 |

(

ggplot(df_temp_co2, aes(x="Year", y="Jun"))

+ geom_line(size=1)

+ labs(

title="June temperature anomalies",

)

+ scale_x_continuous(format="d")

)base_plot = ggplot(df_temp_co2) + scale_x_continuous(format="d")

plot_p = (

base_plot

+ geom_line(aes(x="Year", y="Jun"), size=1)

+ labs(title="June temperature anomalies")

)

plot_q = (

base_plot

+ geom_line(aes(x="Year", y="Trend"), size=1)

+ labs(title="Carbon dioxide emissions")

)

gggrid([plot_p, plot_q], ncol=2)Question of part 1.3 1:whether or not you think this data is a reliable representation of the global atmosphere answer of part 1.3 1:Considering that Dave Keeling, who was the first to make accurate measurements of CO2 in the atmosphere, chose the site high up on the slopes of the Mauna Loa volcano,air there must be different from other regions.I don’t think it is a reliable representation of the global atmosphere. Question of part 1.3 2:In your own words, explain the difference between these two measures of CO2 levels. answer of part 1.3 2:The variable interpolated is always a little bit higher than the variable trend. Question of part 1.3 3:What does this chart suggest about the relationship between CO2 and time? answer of part 1.3 3:There is a strong positive association between the two variables-higher temperature anomalies are associated with higher CO2 levels. Question of part 1.3 4:Calculate and interpret the (Pearson) correlation coefficient between these two variables;discuss the shortcomings of using this coefficient to summarize the relationship between variables answer of part 1.3 4:In this case, the correlation coefficient tells us that an upward-sloping straight line is quite a good fit to the date (as seen on the scatterplot). There is a strong positive association between the two variables (higher temperature anomalies are associated with higher CO2 levels).

One limitation of this correlation measure is that it only tells us about the strength of the upward- or downward-sloping linear relationship between two variables; in other words, how closely the scatterplot aligns along an upward- or downward-sloping straight line. The correlation coefficient cannot tell us if the two variables have a different kind of relationship (such as that represented by a wavy line).

Source: Create a variable that has years 1951 to 1980, and months Jan to Dec (inclusive)practical2

Requirement already satisfied: openpyxl in c:\users\shuos\anaconda3\lib\site-packages (3.1.2)

Requirement already satisfied: et-xmlfile in c:\users\shuos\anaconda3\lib\site-packages (from openpyxl) (1.1.0)

Note: you may need to restart the kernel to use updated packages.import pandas as pd

import matplotlib as mpl

import matplotlib.pyplot as plt

import numpy as np

from pathlib import Path

import pingouin as pg

from lets_plot import *

LetsPlot.setup_html(no_js=True)

### You don't need to use these settings yourself

### — they are just here to make the book look nicer!

# Set the plot style for prettier charts:

plt.style.use(

"https://raw.githubusercontent.com/aeturrell/core_python/main/plot_style.txt"

)# Create a dictionary with the data in

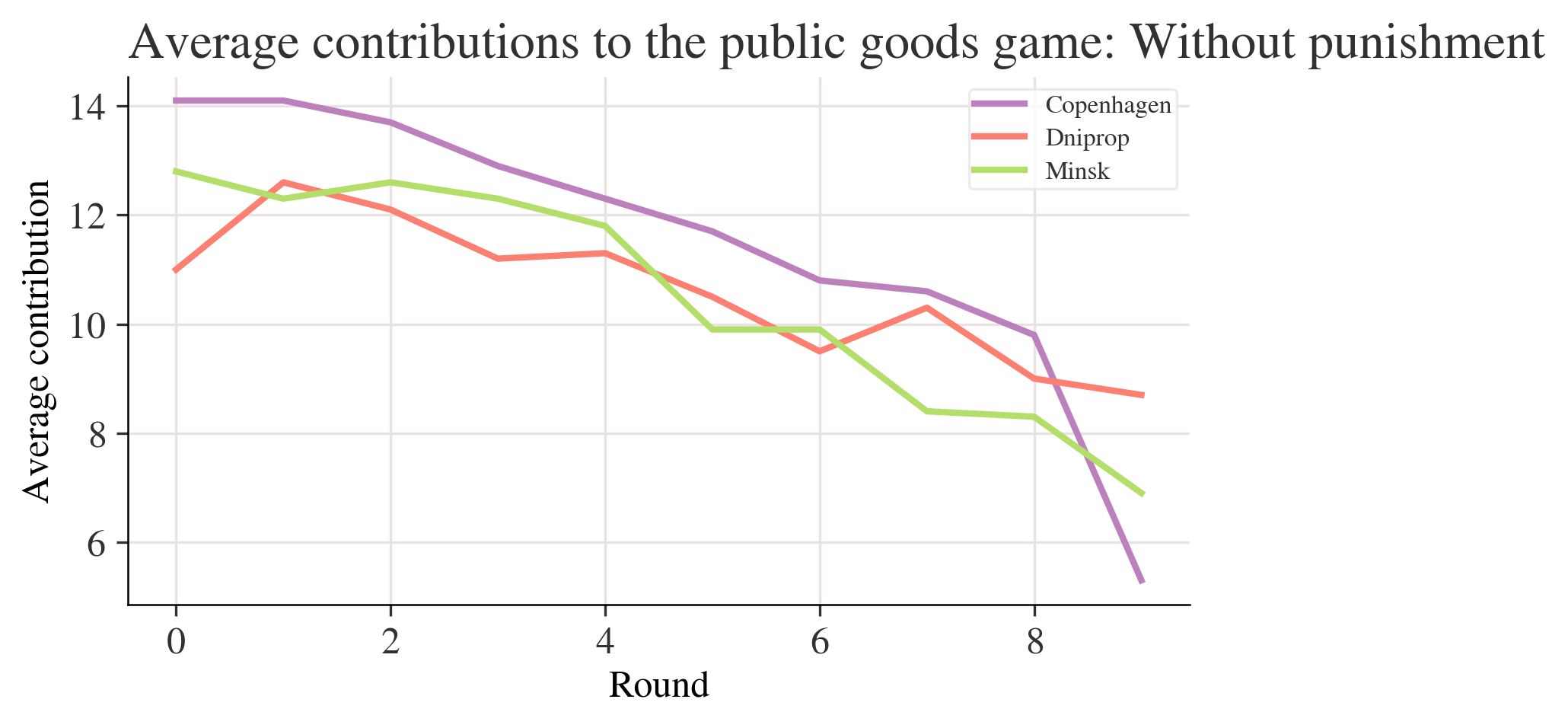

data = {

"Copenhagen": [14.1, 14.1, 13.7, 12.9, 12.3, 11.7, 10.8, 10.6, 9.8, 5.3],

"Dniprop": [11.0, 12.6, 12.1, 11.2, 11.3, 10.5, 9.5, 10.3, 9.0, 8.7],

"Minsk": [12.8, 12.3, 12.6, 12.3, 11.8, 9.9, 9.9, 8.4, 8.3, 6.9],

}

df = pd.DataFrame.from_dict(data)

df.head()| Copenhagen | Dniprop | Minsk | |

|---|---|---|---|

| 0 | 14.1 | 11.0 | 12.8 |

| 1 | 14.1 | 12.6 | 12.3 |

| 2 | 13.7 | 12.1 | 12.6 |

| 3 | 12.9 | 11.2 | 12.3 |

| 4 | 12.3 | 11.3 | 11.8 |

# Plot the data

fig, ax = plt.subplots()

df.plot(ax=ax)

ax.set_title("Average contributions to the public goods game: Without punishment")

ax.set_ylabel("Average contribution")

ax.set_xlabel("Round");

test_df=

City A City B

0 14.1 11.0

1 14.1 99.0

2 13.7 12.1

test_copy=

City A City B

0 14.1 11.0

1 14.1 12.6

2 13.7 12.1

<class 'pandas.core.frame.DataFrame'>

Index: 10 entries, 1 to 10

Data columns (total 16 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Copenhagen 10 non-null object

1 Dnipropetrovs’k 10 non-null object

2 Minsk 10 non-null object

3 St. Gallen 10 non-null object

4 Muscat 10 non-null object

5 Samara 10 non-null object

6 Zurich 10 non-null object

7 Boston 10 non-null object

8 Bonn 10 non-null object

9 Chengdu 10 non-null object

10 Seoul 10 non-null object

11 Riyadh 10 non-null object

12 Nottingham 10 non-null object

13 Athens 10 non-null object

14 Istanbul 10 non-null object

15 Melbourne 10 non-null object

dtypes: object(16)

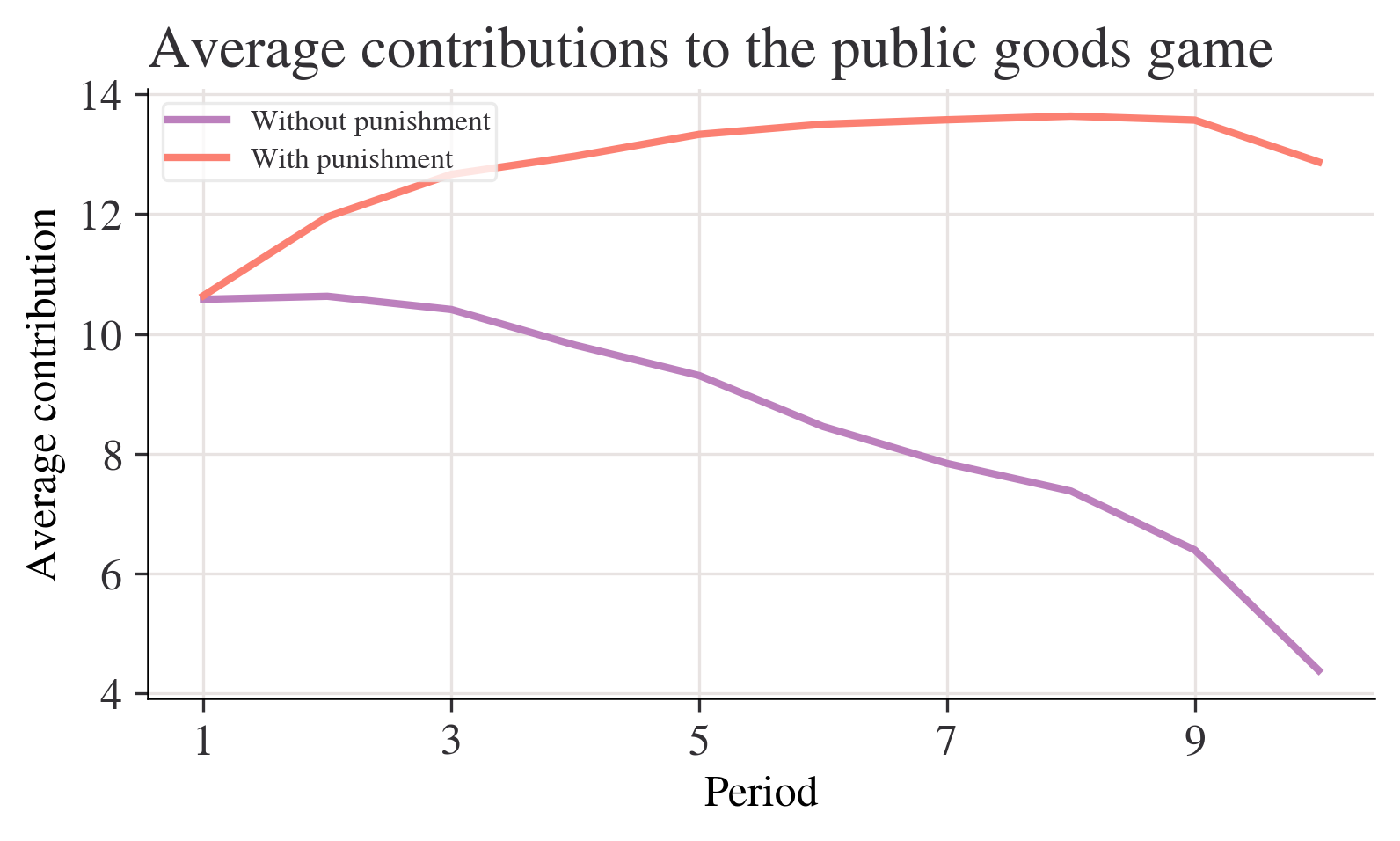

memory usage: 1.3+ KBfig, ax = plt.subplots()

mean_n_c.plot(ax=ax, label="Without punishment")

mean_p_c.plot(ax=ax, label="With punishment")

ax.set_title("Average contributions to the public goods game")

ax.set_ylabel("Average contribution")

ax.legend();

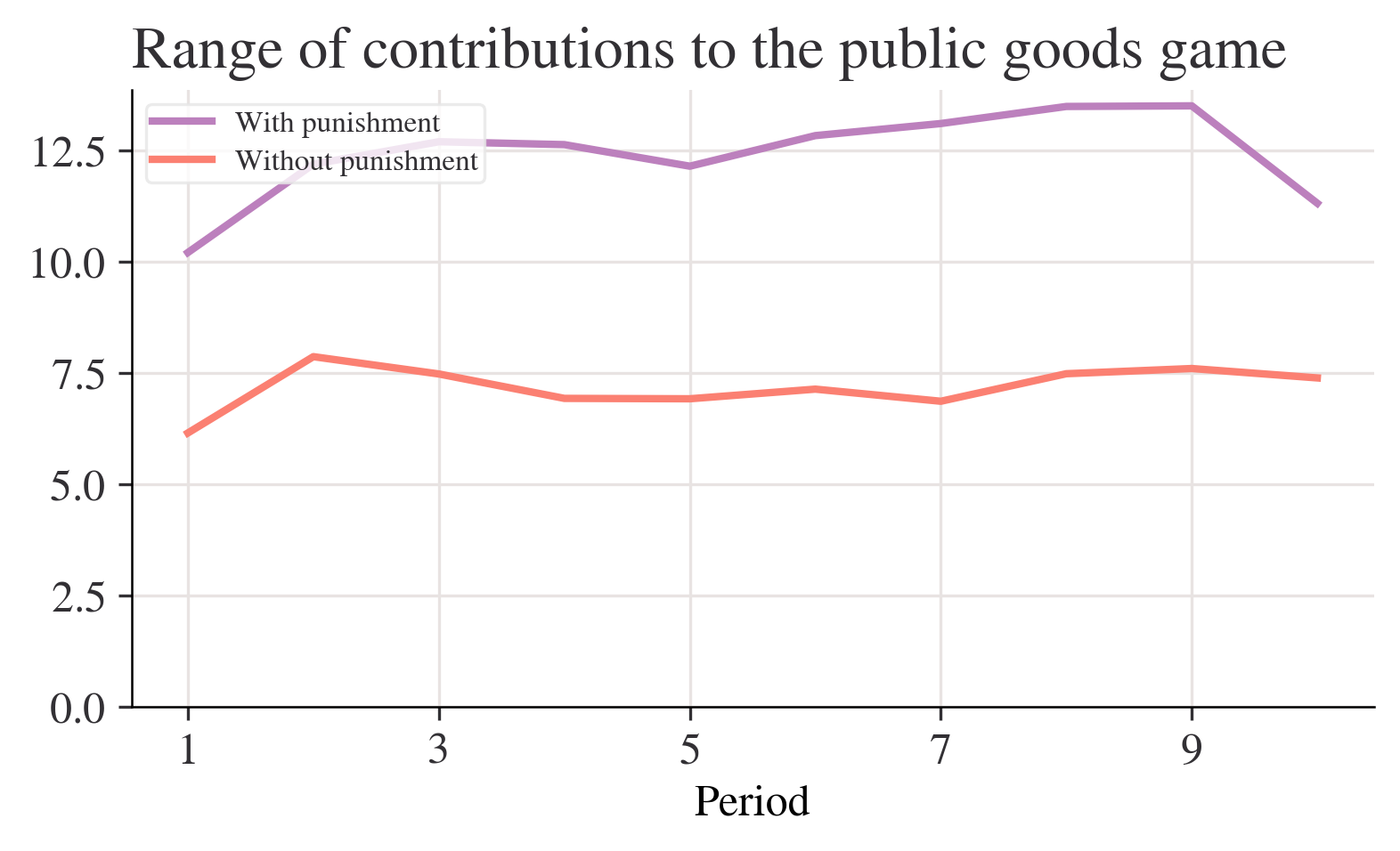

Q:Describe any differences and similarities you see in the mean contribution over time in both experiments. A:The unpunished experiments show a downward trend, and the trend for the punished experiments is generally slowly rising. Both have the fastest rate of decline in the period 9.

partial_names_list = ["F. Kennedy", "Lennon", "Maynard Keynes", "Wayne"]

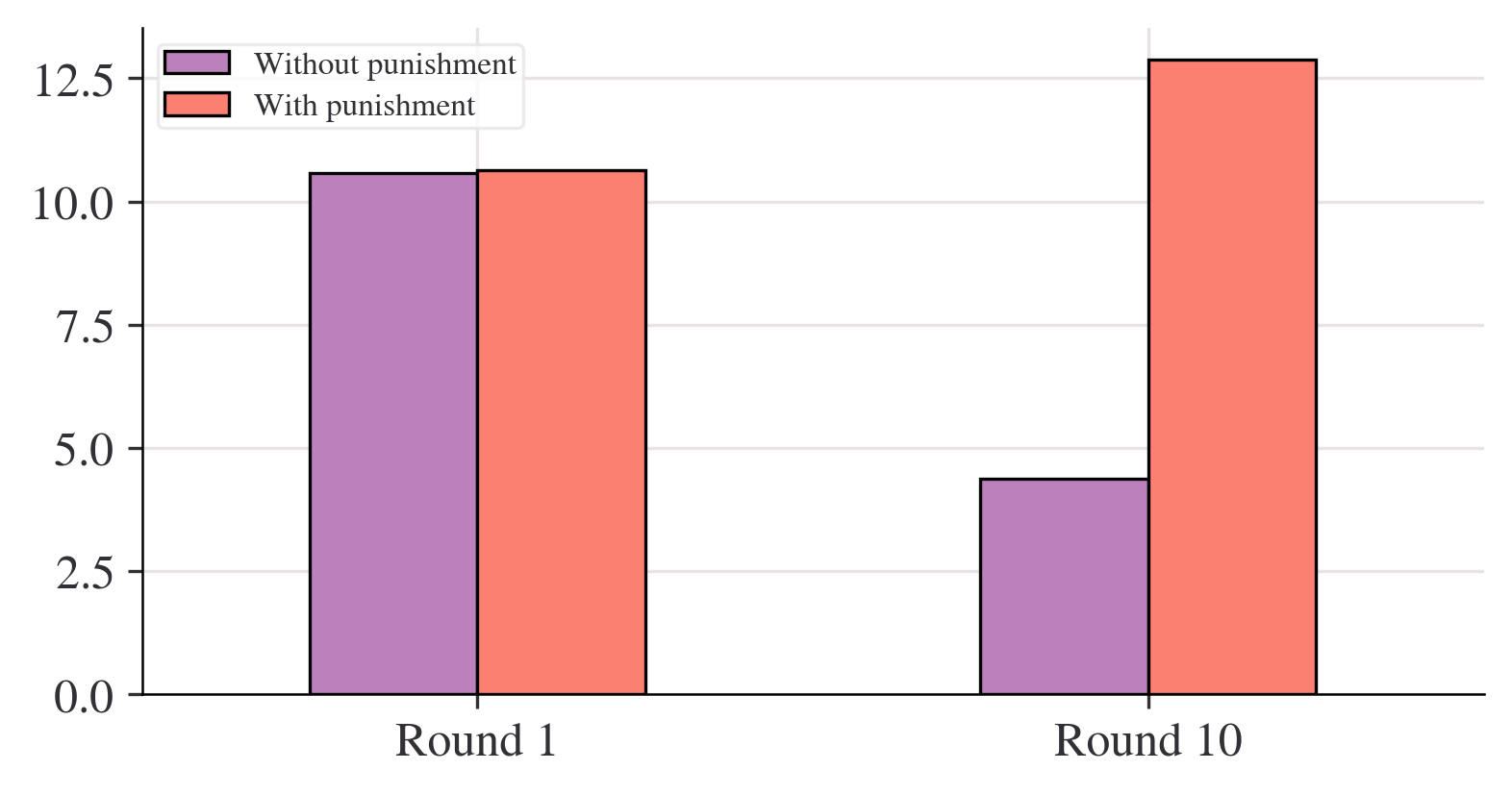

["John " + name for name in partial_names_list]['John F. Kennedy', 'John Lennon', 'John Maynard Keynes', 'John Wayne']['John F. Kennedy', 'John Lennon', 'John Maynard Keynes', 'John Wayne']# Create new dataframe with bars in

compare_grps = pd.DataFrame(

[mean_n_c.loc[[1, 10]], mean_p_c.loc[[1, 10]]],

index=["Without punishment", "With punishment"],

)

# Rename columns to have 'round' in them

compare_grps.columns = ["Round " + str(i) for i in compare_grps.columns]

# Swap the column and index variables around with the transpose function, ready for plotting (.T is transpose)

compare_grps = compare_grps.T

# Make a bar chart

compare_grps.plot.bar(rot=0);

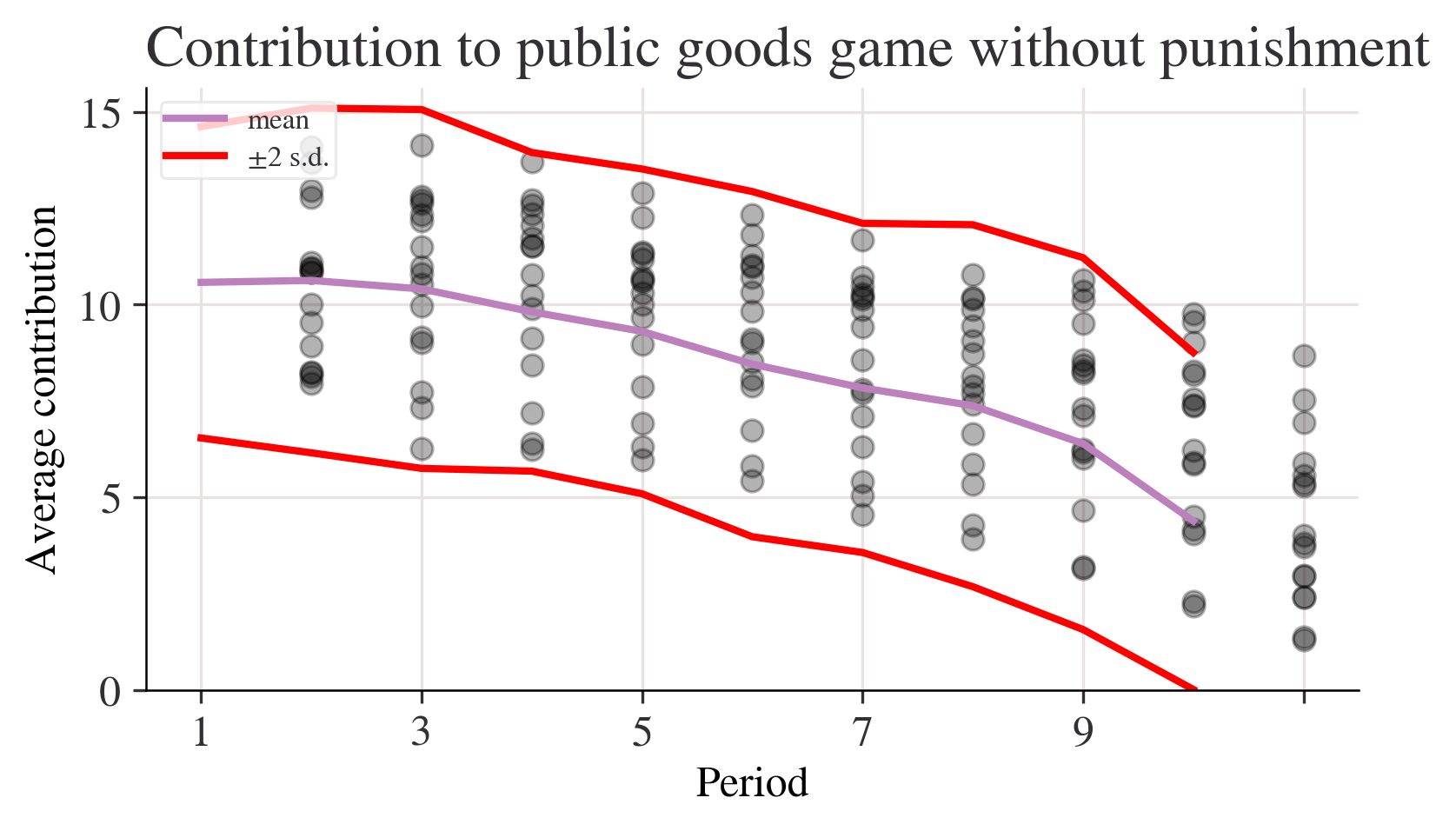

Q:Calculate the standard deviation for Periods 1 and 10 separately, for both experiments. Does the rule of thumb apply? (In other words, are most values within two standard deviations of the mean?) A:Contributions without punishment,Period 1: standard deviation about 1.82. Period 10: standard deviation is about 2.03 Contributions with punishment:Period 1: standard deviation is about 3.30. Period 10: standard deviation is about 4.67

| std | var | mean | |

|---|---|---|---|

| Period | |||

| 1 | 2.020724 | 4.083325 | 10.578313 |

| 2 | 2.238129 | 5.009220 | 10.628398 |

| 3 | 2.329569 | 5.426891 | 10.407079 |

| 4 | 2.068213 | 4.277504 | 9.813033 |

| 5 | 2.108329 | 4.445049 | 9.305433 |

| 6 | 2.240881 | 5.021549 | 8.454844 |

| 7 | 2.136614 | 4.565117 | 7.837568 |

| 8 | 2.349442 | 5.519880 | 7.376388 |

| 9 | 2.413845 | 5.826645 | 6.392985 |

| 10 | 2.187126 | 4.783520 | 4.383769 |

fig, ax = plt.subplots()

n_c["mean"].plot(ax=ax, label="mean")

# mean + 2 standard deviations

(n_c["mean"] + 2 * n_c["std"]).plot(ax=ax, ylim=(0, None), color="red", label="±2 s.d.")

# mean - 2 standard deviations

(n_c["mean"] - 2 * n_c["std"]).plot(ax=ax, ylim=(0, None), color="red", label="")

for i in range(len(data_n.columns)):

ax.scatter(x=data_n.index, y=data_n.iloc[:, i], color="k", alpha=0.3)

ax.legend()

ax.set_ylabel("Average contribution")

ax.set_title("Contribution to public goods game without punishment")

plt.show();

Period

1 10.199675

2 12.185065

3 12.689935

4 12.625000

5 12.140375

6 12.827541

7 13.098931

8 13.482621

9 13.496754

10 11.307360

dtype: float64# A lambda function accepting three inputs, a, b, and c, and calculating the sum of the squares

test_function = lambda a, b, c: a**2 + b**2 + c**2

# Now we apply the function by handing over (in parenthesis) the following inputs: a=3, b=4 and c=5

test_function(3, 4, 5)50fig, ax = plt.subplots()

range_p.plot(ax=ax, label="With punishment")

range_n.plot(ax=ax, label="Without punishment")

ax.set_ylim(0, None)

ax.legend()

ax.set_title("Range of contributions to the public goods game")

plt.show();

| range | max | min | std | mean | |

|---|---|---|---|---|---|

| Period | |||||

| 1 | 6.14 | 14.10 | 7.96 | 2.02 | 10.58 |

| 10 | 7.38 | 8.68 | 1.30 | 2.19 | 4.38 |

| range | max | min | std | mean | |

|---|---|---|---|---|---|

| Period | |||||

| 1 | 10.20 | 16.02 | 5.82 | 3.21 | 10.64 |

| 10 | 11.31 | 17.51 | 6.20 | 3.90 | 12.87 |

practice_3-1

| rownames | name | year | month | day | hour | lat | long | status | category | wind | pressure | tropicalstorm_force_diameter | hurricane_force_diameter | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | Amy | 1975 | 6 | 27 | 0 | 27.5 | -79.0 | tropical depression | NaN | 25 | 1013 | NaN | NaN |

| 1 | 2 | Amy | 1975 | 6 | 27 | 6 | 28.5 | -79.0 | tropical depression | NaN | 25 | 1013 | NaN | NaN |

| 2 | 3 | Amy | 1975 | 6 | 27 | 12 | 29.5 | -79.0 | tropical depression | NaN | 25 | 1013 | NaN | NaN |

| 3 | 4 | Amy | 1975 | 6 | 27 | 18 | 30.5 | -79.0 | tropical depression | NaN | 25 | 1013 | NaN | NaN |

| 4 | 5 | Amy | 1975 | 6 | 28 | 0 | 31.5 | -78.8 | tropical depression | NaN | 25 | 1012 | NaN | NaN |

| 5 | 6 | Amy | 1975 | 6 | 28 | 6 | 32.4 | -78.7 | tropical depression | NaN | 25 | 1012 | NaN | NaN |

| 6 | 7 | Amy | 1975 | 6 | 28 | 12 | 33.3 | -78.0 | tropical depression | NaN | 25 | 1011 | NaN | NaN |

| 7 | 8 | Amy | 1975 | 6 | 28 | 18 | 34.0 | -77.0 | tropical depression | NaN | 30 | 1006 | NaN | NaN |

| 8 | 9 | Amy | 1975 | 6 | 29 | 0 | 34.4 | -75.8 | tropical storm | NaN | 35 | 1004 | NaN | NaN |

| 9 | 10 | Amy | 1975 | 6 | 29 | 6 | 34.0 | -74.8 | tropical storm | NaN | 40 | 1002 | NaN | NaN |

'<!DOCTYPE html>\n<html xmlns="http://www.w3.org/1999/xhtml" lang="en" xml:lang="en"><head>\n\n<meta charset="utf-8">\n<meta name="generator" content="quarto-1.6.39">\n\n<meta name="viewport" content="width=device-width, initial-scale=1.0, user-scalable=yes">\n\n<meta name="author" content="Arthur Turrell">\n'TJDdmFjYW5jaWVzJTJDQ09WSUQtMTk=" data-index="1" data-listing-date-modified-sort="NaN" data-listing-date-sort="1651359600000" data-listing-file-modified-sort="1687564711698" data-listing-reading-time-sort="1" data-listing-word-count-sort="182">

<div class="project-content listing-pub-info">

<p>

Draca, Mirko, Emma Duchini, Roland Rathelot, Arthur Turrell, and Giulia Vattuone. Revolution in Progress? The Rise of Remote Work in the UK.

<i>

Univers<p>Blundell, Jack, Emma Duchini, Stefania Simion, and Arthur Turrell. "Pay transparency and gender equality." <i>American Economic Journal: Economic Policy</i> (2024). doi: <a href="https://www.aeaweb.org/articles?id=10.1257/pol.20220766&from=f"><code>10.1257/pol.20220766</code></a></p>'Blundell, Jack, Emma Duchini, Stefania Simion, and Arthur Turrell. "Pay transparency and gender equality." American Economic Journal: Economic Policy (2024). doi: 10.1257/pol.20220766'projects = soup.find_all("div", class_="project-content listing-pub-info")

projects = [x.text.strip() for x in projects]

projects[:4]['Blundell, Jack, Emma Duchini, Stefania Simion, and Arthur Turrell. "Pay transparency and gender equality." American Economic Journal: Economic Policy (2024). doi: 10.1257/pol.20220766',

'Botta, Federico, Robin Lovelace, Laura Gilbert, and Arthur Turrell. "Packaging code and data for reproducible research: A case study of journey time statistics." Environment and Planning B: Urban Analytics and City Science (2024): 23998083241267331. doi: 10.1177/23998083241267331',

'Kalamara, Eleni, Arthur Turrell, Chris Redl, George Kapetanios, and Sujit Kapadia. "Making text count: economic forecasting using newspaper text." Journal of Applied Econometrics 37, no. 5 (2022): 896-919. doi: 10.1002/jae.2907',

'Turrell, A., Speigner, B., Copple, D., Djumalieva, J. and Thurgood, J., 2021. Is the UK’s productivity puzzle mostly driven by occupational mismatch? An analysis using big data on job vacancies. Labour Economics, 71, p.102013. doi: 10.1016/j.labeco.2021.102013']df_list = pd.read_html(

"https://simple.wikipedia.org/wiki/FIFA_World_Cup", match="Sweden"

)

# Retrieve first and only entry from list of dataframes

df = df_list[0]

df.head()| Years | Hosts | Winners | Score | Runner's-up | Third place | Score.1 | Fourth place | |

|---|---|---|---|---|---|---|---|---|

| 0 | 1930 Details | Uruguay | Uruguay | 4 - 2 | Argentina | United States | [note 1] | Yugoslavia |

| 1 | 1934 Details | Italy | Italy | 2 - 1 | Czechoslovakia | Germany | 3 - 2 | Austria |

| 2 | 1938 Details | France | Italy | 4 - 2 | Hungary | Brazil | 4 - 2 | Sweden |

| 3 | 1950 Details | Brazil | Uruguay | 2 - 1 | Brazil | Sweden | [note 2] | Spain |

| 4 | 1954 Details | Switzerland | West Germany | 3 - 2 | Hungary | Austria | 3 - 1 | Uruguay |

practice_3-2

Requirement already satisfied: requests in c:\users\shuos\anaconda3\lib\site-packages (2.32.2)

Requirement already satisfied: charset-normalizer<4,>=2 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (2.0.4)

Requirement already satisfied: idna<4,>=2.5 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (3.7)

Requirement already satisfied: urllib3<3,>=1.21.1 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (2.2.2)

Requirement already satisfied: certifi>=2017.4.17 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (2024.8.30)

Note: you may need to restart the kernel to use updated packages.Collecting html5libNote: you may need to restart the kernel to use updated packages.

Downloading html5lib-1.1-py2.py3-none-any.whl.metadata (16 kB)

Requirement already satisfied: six>=1.9 in c:\users\shuos\anaconda3\lib\site-packages (from html5lib) (1.16.0)

Requirement already satisfied: webencodings in c:\users\shuos\anaconda3\lib\site-packages (from html5lib) (0.5.1)

Downloading html5lib-1.1-py2.py3-none-any.whl (112 kB)

---------------------------------------- 0.0/112.2 kB ? eta -:--:--

--- ------------------------------------ 10.2/112.2 kB ? eta -:--:--

------------------------ -------------- 71.7/112.2 kB 975.2 kB/s eta 0:00:01

-------------------------------------- 112.2/112.2 kB 926.7 kB/s eta 0:00:00

Installing collected packages: html5lib

Successfully installed html5lib-1.1Requirement already satisfied: bs4 in c:\users\shuos\anaconda3\lib\site-packages (0.0.2)

Requirement already satisfied: beautifulsoup4 in c:\users\shuos\anaconda3\lib\site-packages (from bs4) (4.12.3)

Requirement already satisfied: soupsieve>1.2 in c:\users\shuos\anaconda3\lib\site-packages (from beautifulsoup4->bs4) (2.5)

Note: you may need to restart the kernel to use updated packages.Requirement already satisfied: pandas in c:\users\shuos\anaconda3\lib\site-packages (2.2.2)

Requirement already satisfied: numpy>=1.26.0 in c:\users\shuos\anaconda3\lib\site-packages (from pandas) (1.26.4)

Requirement already satisfied: python-dateutil>=2.8.2 in c:\users\shuos\anaconda3\lib\site-packages (from pandas) (2.9.0.post0)

Requirement already satisfied: pytz>=2020.1 in c:\users\shuos\anaconda3\lib\site-packages (from pandas) (2024.1)

Requirement already satisfied: tzdata>=2022.7 in c:\users\shuos\anaconda3\lib\site-packages (from pandas) (2023.3)

Requirement already satisfied: six>=1.5 in c:\users\shuos\anaconda3\lib\site-packages (from python-dateutil>=2.8.2->pandas) (1.16.0)

Note: you may need to restart the kernel to use updated packages.# create a empty list for storing

# movie information

list = []

# Iterating over movies to extract

# each movie's details

for index in range(0, len(movies)):

# Separating movie into: 'place',

# 'title', 'year'

movie_string = movies[index].get_text()

movie = (' '.join(movie_string.split()).replace('.', ''))

movie_title = movie[len(str(index))+1:-7]

year = re.search('\((.*?)\)', movie_string).group(1)

place = movie[:len(str(index))-(len(movie))]

data = {"place": place,

"movie_title": movie_title,

"rating": ratings[index],

"year": year,

"star_cast": crew[index],

}

list.append(data)from bs4 import BeautifulSoup

import requests

import re

import pandas as pd

# Downloading imdb top 250 movie's data

url = 'http://www.imdb.com/chart/top'

response = requests.get(url)

soup = BeautifulSoup(response.text, "html.parser")

movies = soup.select('td.titleColumn')

crew = [a.attrs.get('title') for a in soup.select('td.titleColumn a')]

ratings = [b.attrs.get('data-value')

for b in soup.select('td.posterColumn span[name=ir]')]

# create a empty list for storing

# movie information

list = []

# Iterating over movies to extract

# each movie's details

for index in range(0, len(movies)):

# Separating movie into: 'place',

# 'title', 'year'

movie_string = movies[index].get_text()

movie = (' '.join(movie_string.split()).replace('.', ''))

movie_title = movie[len(str(index))+1:-7]

year = re.search('\((.*?)\)', movie_string).group(1)

place = movie[:len(str(index))-(len(movie))]

data = {"place": place,

"movie_title": movie_title,

"rating": ratings[index],

"year": year,

"star_cast": crew[index],

}

list.append(data)

# printing movie details with its rating.

for movie in list:

print(movie['place'], '-', movie['movie_title'], '('+movie['year'] +

') -', 'Starring:', movie['star_cast'], movie['rating'])

##.......##

df = pd.DataFrame(list)

df.to_csv('imdb_top_250_movies.csv',index=False)practice_3-3

Requirement already satisfied: requests in c:\users\shuos\anaconda3\lib\site-packages (2.32.2)

Requirement already satisfied: beautifulsoup4 in c:\users\shuos\anaconda3\lib\site-packages (4.12.3)

Requirement already satisfied: charset-normalizer<4,>=2 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (2.0.4)

Requirement already satisfied: idna<4,>=2.5 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (3.7)

Requirement already satisfied: urllib3<3,>=1.21.1 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (2.2.2)

Requirement already satisfied: certifi>=2017.4.17 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (2024.8.30)

Requirement already satisfied: soupsieve>1.2 in c:\users\shuos\anaconda3\lib\site-packages (from beautifulsoup4) (2.5)

Note: you may need to restart the kernel to use updated packages.import requests

# 定义请求的 URL 和 headers

url = "https://movie.douban.com/top250"

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"

}

# 发送 GET 请求

response = requests.get(url, headers=headers)

response.encoding = 'utf-8' # 设置编码方式

html_content = response.text # 获取网页的 HTML 内容

print("网页内容加载成功!")网页内容加载成功!from bs4 import BeautifulSoup

# 使用 Beautiful Soup 解析 HTML

soup = BeautifulSoup(html_content, 'html.parser')

# 提取电影名称、描述、评分和评价人数

movies = []

for item in soup.find_all('div', class_='item'):

title = item.find('span', class_='title').get_text() # 电影名称

description = item.find('span', class_='inq') # 电影描述

rating = item.find('span', class_='rating_num').get_text() # 评分

votes = item.find('div', class_='star').find_all('span')[3].get_text() # 评价人数

# 如果没有描述,将其置为空字符串

if description:

description = description.get_text()

else:

description = ''

movie = {

"title": title,

"description": description,

"rating": rating,

"votes": votes.replace('人评价', '').strip()

}

movies.append(movie)

print("数据提取成功!")数据提取成功!import csv

# 将数据保存到 CSV 文件

with open('douban_top250.csv', 'w', newline='', encoding='utf-8') as csvfile:

fieldnames = ['title', 'description', 'rating', 'votes']

writer = csv.DictWriter(csvfile, fieldnames=fieldnames)

writer.writeheader() # 写入表头

for movie in movies:

writer.writerow(movie) # 写入每一行数据

print("数据已成功保存到 douban_top250.csv")数据已成功保存到 douban_top250.csvimport requests

from bs4 import BeautifulSoup

import csv

# 定义请求的 URL 和 headers

url = "https://movie.douban.com/top250"

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"

}

# 发送 GET 请求

response = requests.get(url, headers=headers)

response.encoding = 'utf-8' # 设置编码方式

html_content = response.text # 获取网页的 HTML 内容

# 使用 Beautiful Soup 解析 HTML

soup = BeautifulSoup(html_content, 'html.parser')

# 提取电影名称、描述、评分和评价人数

movies = []

for item in soup.find_all('div', class_='item'):

title = item.find('span', class_='title').get_text() # 电影名称

description = item.find('span', class_='inq') # 电影描述

rating = item.find('span', class_='rating_num').get_text() # 评分

votes = item.find('div', class_='star').find_all('span')[3].get_text() # 评价人数

# 如果没有描述,将其置为空字符串

if description:

description = description.get_text()

else:

description = ''

movie = {

"title": title,

"description": description,

"rating": rating,

"votes": votes.replace('人评价', '').strip()

}

movies.append(movie)

# 将数据保存到 CSV 文件

with open('douban_top250.csv', 'w', newline='', encoding='utf-8') as csvfile:

fieldnames = ['title', 'description', 'rating', 'votes']

writer = csv.DictWriter(csvfile, fieldnames=fieldnames)

writer.writeheader() # 写入表头

for movie in movies:

writer.writerow(movie) # 写入每一行数据

print("数据已成功保存到 douban_top250.csv")数据已成功保存到 douban_top250.csvpractical_3

Requirement already satisfied: pandas-datareader in c:\users\shuos\anaconda3\lib\site-packages (0.10.0)Note: you may need to restart the kernel to use updated packages.

Requirement already satisfied: lxml in c:\users\shuos\anaconda3\lib\site-packages (from pandas-datareader) (5.2.1)

Requirement already satisfied: pandas>=0.23 in c:\users\shuos\anaconda3\lib\site-packages (from pandas-datareader) (2.2.2)

Requirement already satisfied: requests>=2.19.0 in c:\users\shuos\anaconda3\lib\site-packages (from pandas-datareader) (2.32.2)

Requirement already satisfied: numpy>=1.26.0 in c:\users\shuos\anaconda3\lib\site-packages (from pandas>=0.23->pandas-datareader) (1.26.4)

Requirement already satisfied: python-dateutil>=2.8.2 in c:\users\shuos\anaconda3\lib\site-packages (from pandas>=0.23->pandas-datareader) (2.9.0.post0)

Requirement already satisfied: pytz>=2020.1 in c:\users\shuos\anaconda3\lib\site-packages (from pandas>=0.23->pandas-datareader) (2024.1)

Requirement already satisfied: tzdata>=2022.7 in c:\users\shuos\anaconda3\lib\site-packages (from pandas>=0.23->pandas-datareader) (2023.3)

Requirement already satisfied: charset-normalizer<4,>=2 in c:\users\shuos\anaconda3\lib\site-packages (from requests>=2.19.0->pandas-datareader) (2.0.4)

Requirement already satisfied: idna<4,>=2.5 in c:\users\shuos\anaconda3\lib\site-packages (from requests>=2.19.0->pandas-datareader) (3.7)

Requirement already satisfied: urllib3<3,>=1.21.1 in c:\users\shuos\anaconda3\lib\site-packages (from requests>=2.19.0->pandas-datareader) (2.2.2)

Requirement already satisfied: certifi>=2017.4.17 in c:\users\shuos\anaconda3\lib\site-packages (from requests>=2.19.0->pandas-datareader) (2024.8.30)

Requirement already satisfied: six>=1.5 in c:\users\shuos\anaconda3\lib\site-packages (from python-dateutil>=2.8.2->pandas>=0.23->pandas-datareader) (1.16.0)Collecting BeautifulSoupNote: you may need to restart the kernel to use updated packages.

Using cached BeautifulSoup-3.2.2.tar.gz (32 kB)

Preparing metadata (setup.py): started

Preparing metadata (setup.py): finished with status 'error'Requirement already satisfied: bs4 in c:\users\shuos\anaconda3\lib\site-packages (0.0.2)

Requirement already satisfied: beautifulsoup4 in c:\users\shuos\anaconda3\lib\site-packages (from bs4) (4.12.3)

Requirement already satisfied: soupsieve>1.2 in c:\users\shuos\anaconda3\lib\site-packages (from beautifulsoup4->bs4) (2.5)

Note: you may need to restart the kernel to use updated packages.| rownames | name | year | month | day | hour | lat | long | status | category | wind | pressure | tropicalstorm_force_diameter | hurricane_force_diameter | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | Amy | 1975 | 6 | 27 | 0 | 27.5 | -79.0 | tropical depression | NaN | 25 | 1013 | NaN | NaN |

| 1 | 2 | Amy | 1975 | 6 | 27 | 6 | 28.5 | -79.0 | tropical depression | NaN | 25 | 1013 | NaN | NaN |

| 2 | 3 | Amy | 1975 | 6 | 27 | 12 | 29.5 | -79.0 | tropical depression | NaN | 25 | 1013 | NaN | NaN |

| 3 | 4 | Amy | 1975 | 6 | 27 | 18 | 30.5 | -79.0 | tropical depression | NaN | 25 | 1013 | NaN | NaN |

| 4 | 5 | Amy | 1975 | 6 | 28 | 0 | 31.5 | -78.8 | tropical depression | NaN | 25 | 1012 | NaN | NaN |

| 5 | 6 | Amy | 1975 | 6 | 28 | 6 | 32.4 | -78.7 | tropical depression | NaN | 25 | 1012 | NaN | NaN |

| 6 | 7 | Amy | 1975 | 6 | 28 | 12 | 33.3 | -78.0 | tropical depression | NaN | 25 | 1011 | NaN | NaN |

| 7 | 8 | Amy | 1975 | 6 | 28 | 18 | 34.0 | -77.0 | tropical depression | NaN | 30 | 1006 | NaN | NaN |

| 8 | 9 | Amy | 1975 | 6 | 29 | 0 | 34.4 | -75.8 | tropical storm | NaN | 35 | 1004 | NaN | NaN |

| 9 | 10 | Amy | 1975 | 6 | 29 | 6 | 34.0 | -74.8 | tropical storm | NaN | 40 | 1002 | NaN | NaN |

'<!DOCTYPE html>\n<html xmlns="http://www.w3.org/1999/xhtml" lang="en" xml:lang="en"><head>\n\n<meta charset="utf-8">\n<meta name="generator" content="quarto-1.5.56">\n\n<meta name="viewport" content="width=device-width, initial-scale=1.0, user-scalable=yes">\n\n<meta name="author" content="Arthur Turrell">\n' </div>

<div class="project-category">

<a href="#category=gender pay gap">

gender pay gap

</a>

</div>

<div class="project-category">

<a href="#category=labour">

labour

</a>

</div>

<div class="project-category">

<a href="#category=text analysis">

text analysis

</a>

</div>

</div>

<div class="project-details-listing<p>Botta, Federico, Robin Lovelace, Laura Gilbert, and Arthur Turrell. "Packaging code and data for reproducible research: A case study of journey time statistics." <i>Environment and Planning B: Urban Analytics and City Science</i> (2024): 23998083241267331. doi: <a href="https://doi.org/10.1177/23998083241267331"><code>10.1177/23998083241267331</code></a></p>'Botta, Federico, Robin Lovelace, Laura Gilbert, and Arthur Turrell. "Packaging code and data for reproducible research: A case study of journey time statistics." Environment and Planning B: Urban Analytics and City Science (2024): 23998083241267331. doi: 10.1177/23998083241267331'projects = soup.find_all("div", class_="project-content listing-pub-info")

projects = [x.text.strip() for x in projects]

projects[:4]['Botta, Federico, Robin Lovelace, Laura Gilbert, and Arthur Turrell. "Packaging code and data for reproducible research: A case study of journey time statistics." Environment and Planning B: Urban Analytics and City Science (2024): 23998083241267331. doi: 10.1177/23998083241267331',

'Kalamara, Eleni, Arthur Turrell, Chris Redl, George Kapetanios, and Sujit Kapadia. "Making text count: economic forecasting using newspaper text." Journal of Applied Econometrics 37, no. 5 (2022): 896-919. doi: 10.1002/jae.2907',

'Turrell, A., Speigner, B., Copple, D., Djumalieva, J. and Thurgood, J., 2021. Is the UK’s productivity puzzle mostly driven by occupational mismatch? An analysis using big data on job vacancies. Labour Economics, 71, p.102013. doi: 10.1016/j.labeco.2021.102013',

'Haldane, Andrew G., and Arthur E. Turrell. "Drawing on different disciplines: macroeconomic agent-based models." Journal of Evolutionary Economics 29 (2019): 39-66. doi: 10.1007/s00191-018-0557-5']df_list = pd.read_html(

"https://simple.wikipedia.org/wiki/FIFA_World_Cup", match="Sweden"

)

# Retrieve first and only entry from list of dataframes

df = df_list[0]

df.head()| Years | Hosts | Winners | Score | Runner's-up | Third place | Score.1 | Fourth place | |

|---|---|---|---|---|---|---|---|---|

| 0 | 1930 Details | Uruguay | Uruguay | 4 - 2 | Argentina | United States | [note 1] | Yugoslavia |

| 1 | 1934 Details | Italy | Italy | 2 - 1 | Czechoslovakia | Germany | 3 - 2 | Austria |

| 2 | 1938 Details | France | Italy | 4 - 2 | Hungary | Brazil | 4 - 2 | Sweden |

| 3 | 1950 Details | Brazil | Uruguay | 2 - 1 | Brazil | Sweden | [note 2] | Spain |

| 4 | 1954 Details | Switzerland | West Germany | 3 - 2 | Hungary | Austria | 3 - 1 | Uruguay |

Collecting pdftotext

Using cached pdftotext-2.2.2.tar.gz (113 kB)

Preparing metadata (setup.py): started

Preparing metadata (setup.py): finished with status 'done'

Building wheels for collected packages: pdftotext

Building wheel for pdftotext (setup.py): started

Building wheel for pdftotext (setup.py): finished with status 'error'

Running setup.py clean for pdftotext

Failed to build pdftotext

Note: you may need to restart the kernel to use updated packages.# create a empty list for storing

# movie information

list = []

# Iterating over movies to extract

# each movie's details

for index in range(0, len(movies)):

# Separating movie into: 'place',

# 'title', 'year'

movie_string = movies[index].get_text()

movie = (' '.join(movie_string.split()).replace('.', ''))

movie_title = movie[len(str(index))+1:-7]

year = re.search('\((.*?)\)', movie_string).group(1)

place = movie[:len(str(index))-(len(movie))]

data = {"place": place,

"movie_title": movie_title,

"rating": ratings[index],

"year": year,

"star_cast": crew[index],

}

list.append(data)from bs4 import BeautifulSoup

import requests

import re

import pandas as pd

# Downloading imdb top 250 movie's data

url = 'http://www.imdb.com/chart/top'

response = requests.get(url)

soup = BeautifulSoup(response.text, "html.parser")

movies = soup.select('td.titleColumn')

crew = [a.attrs.get('title') for a in soup.select('td.titleColumn a')]

ratings = [b.attrs.get('data-value')

for b in soup.select('td.posterColumn span[name=ir]')]

# create a empty list for storing

# movie information

list = []

# Iterating over movies to extract

# each movie's details

for index in range(0, len(movies)):

# Separating movie into: 'place',

# 'title', 'year'

movie_string = movies[index].get_text()

movie = (' '.join(movie_string.split()).replace('.', ''))

movie_title = movie[len(str(index))+1:-7]

year = re.search('\((.*?)\)', movie_string).group(1)

place = movie[:len(str(index))-(len(movie))]

data = {"place": place,

"movie_title": movie_title,

"rating": ratings[index],

"year": year,

"star_cast": crew[index],

}

list.append(data)

# printing movie details with its rating.

for movie in list:

print(movie['place'], '-', movie['movie_title'], '('+movie['year'] +

') -', 'Starring:', movie['star_cast'], movie['rating'])

##.......##

df = pd.DataFrame(list)

df.to_csv('imdb_top_250_movies.csv',index=False)Requirement already satisfied: requests in c:\users\shuos\anaconda3\lib\site-packages (2.32.2)

Requirement already satisfied: charset-normalizer<4,>=2 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (2.0.4)

Requirement already satisfied: idna<4,>=2.5 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (3.7)

Requirement already satisfied: urllib3<3,>=1.21.1 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (2.2.2)

Requirement already satisfied: certifi>=2017.4.17 in c:\users\shuos\anaconda3\lib\site-packages (from requests) (2024.8.30)

Note: you may need to restart the kernel to use updated packages.Requirement already satisfied: beautifulsoup4 in c:\users\shuos\anaconda3\lib\site-packages (4.12.3)

Requirement already satisfied: soupsieve>1.2 in c:\users\shuos\anaconda3\lib\site-packages (from beautifulsoup4) (2.5)

Note: you may need to restart the kernel to use updated packages.import requests

# 定义请求的 URL 和 headers

url = "https://movie.douban.com/top250"

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"

}

# 发送 GET 请求

response = requests.get(url, headers=headers)

response.encoding = 'utf-8' # 设置编码方式

html_content = response.text # 获取网页的 HTML 内容

print("网页内容加载成功!")网页内容加载成功!from bs4 import BeautifulSoup

# 使用 Beautiful Soup 解析 HTML

soup = BeautifulSoup(html_content, 'html.parser')

# 提取电影名称、描述、评分和评价人数

movies = []

for item in soup.find_all('div', class_='item'):

title = item.find('span', class_='title').get_text() # 电影名称

description = item.find('span', class_='inq') # 电影描述

rating = item.find('span', class_='rating_num').get_text() # 评分

votes = item.find('div', class_='star').find_all('span')[3].get_text() # 评价人数

# 如果没有描述,将其置为空字符串

if description:

description = description.get_text()

else:

description = ''

movie = {

"title": title,

"description": description,

"rating": rating,

"votes": votes.replace('人评价', '').strip()

}

movies.append(movie)

print("数据提取成功!")数据提取成功!import csv

# 将数据保存到 CSV 文件

with open('douban_top250.csv', 'w', newline='', encoding='utf-8') as csvfile:

fieldnames = ['title', 'description', 'rating', 'votes']

writer = csv.DictWriter(csvfile, fieldnames=fieldnames)

writer.writeheader() # 写入表头

for movie in movies:

writer.writerow(movie) # 写入每一行数据

print("数据已成功保存到 douban_top250.csv")数据已成功保存到 douban_top250.csvimport requests

from bs4 import BeautifulSoup

import csv

# 定义请求的 URL 和 headers

url = "https://movie.douban.com/top250"

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"

}

# 发送 GET 请求

response = requests.get(url, headers=headers)

response.encoding = 'utf-8' # 设置编码方式

html_content = response.text # 获取网页的 HTML 内容

# 使用 Beautiful Soup 解析 HTML

soup = BeautifulSoup(html_content, 'html.parser')

# 提取电影名称、描述、评分和评价人数

movies = []

for item in soup.find_all('div', class_='item'):

title = item.find('span', class_='title').get_text() # 电影名称

description = item.find('span', class_='inq') # 电影描述

rating = item.find('span', class_='rating_num').get_text() # 评分

votes = item.find('div', class_='star').find_all('span')[3].get_text() # 评价人数

# 如果没有描述,将其置为空字符串

if description:

description = description.get_text()

else:

description = ''

movie = {

"title": title,

"description": description,

"rating": rating,

"votes": votes.replace('人评价', '').strip()

}

movies.append(movie)

# 将数据保存到 CSV 文件

with open('douban_top250.csv', 'w', newline='', encoding='utf-8') as csvfile:

fieldnames = ['title', 'description', 'rating', 'votes']

writer = csv.DictWriter(csvfile, fieldnames=fieldnames)

writer.writeheader() # 写入表头

for movie in movies:

writer.writerow(movie) # 写入每一行数据

print("数据已成功保存到 douban_top250.csv")数据已成功保存到 douban_top250.csv4.IMDb_and_Douban_top-250_movie_datasets

# Regular expressions to extract information

findLink = re.compile(r'<a href="(.*?)">') # detail link

findImgSrc = re.compile(r'<img.*src="(.*?)"', re.S) # image link

findTitle = re.compile(r'<span class="title">(.*)</span>') # movie title

findRating = re.compile(r'<span class="rating_num" property="v:average">(.*)</span>') # rating

findJudge = re.compile(r'<span>(\d*)人评价</span>') # number of reviews

findInq = re.compile(r'<span class="inq">(.*)</span>') # summary

findBd = re.compile(r'<p class="">(.*?)</p>', re.S) # additional infoimport pandas as pd

import matplotlib.pyplot as plt

# Load datasets

douban_file_path = 'douban_top250.csv'

imdb_file_path = 'IMDB Top 250 Movies.csv'

douban_data = pd.read_csv(douban_file_path, encoding='utf-8', on_bad_lines='skip')

imdb_data = pd.read_csv(imdb_file_path, encoding='utf-8', on_bad_lines='skip')

# Renaming columns for clarity and merging compatibility

douban_data.rename(columns={

'影片中文名': 'Title',

'评分': 'Douban_Score',

'评价数': 'Douban_Reviews',

'相关信息': 'Douban_Info'

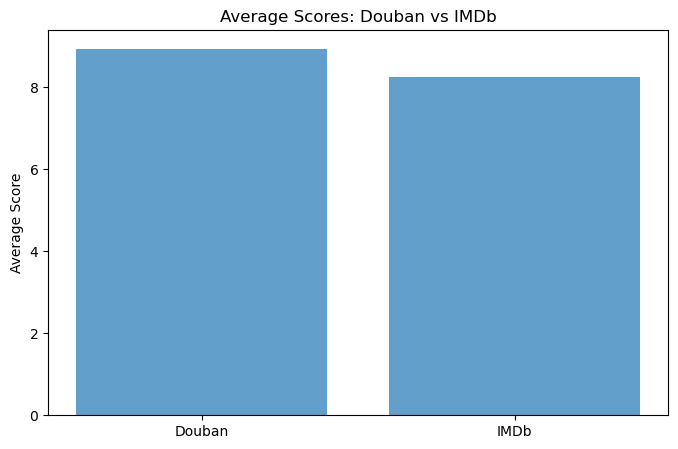

}, inplace=True)# Calculate average scores for both platforms

douban_avg_score = douban_data['Douban_Score'].mean()

imdb_avg_score = imdb_data['IMDB_Score'].mean()

# Find overlapping movies by title

overlap_movies = pd.merge(douban_data, imdb_data, on='Title')

# Visualize average scores

plt.figure(figsize=(8, 5))

plt.bar(['Douban', 'IMDb'], [douban_avg_score, imdb_avg_score], alpha=0.7)

plt.title('Average Scores: Douban vs IMDb')

plt.ylabel('Average Score')

plt.show()

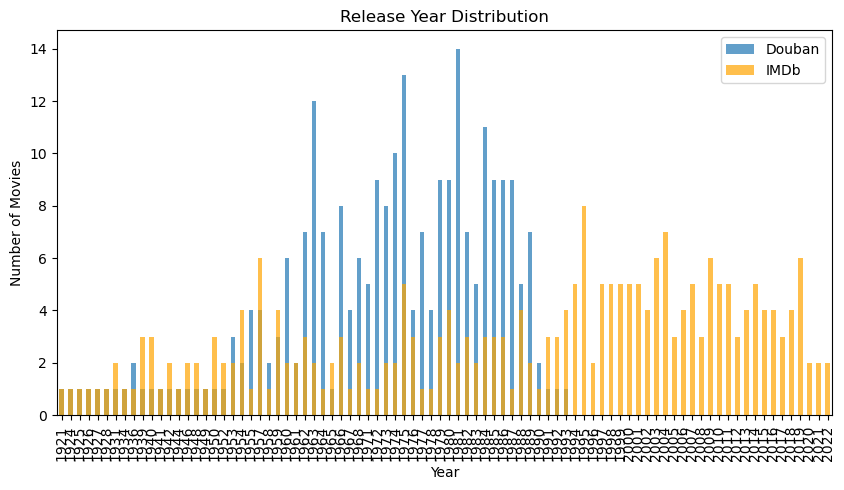

# Analyze release year distribution

plt.figure(figsize=(10, 5))

douban_data['Douban_Info'] = douban_data['Douban_Info'].astype(str)

douban_years = douban_data['Douban_Info'].str.extract(r'(\d{4})').dropna()

douban_years = douban_years[0].astype(int).value_counts().sort_index()

imdb_years = imdb_data['Release_Year'].value_counts().sort_index()

douban_years.plot(kind='bar', alpha=0.7, label='Douban', figsize=(10, 5))

imdb_years.plot(kind='bar', alpha=0.7, label='IMDb', color='orange')

plt.title('Release Year Distribution')

plt.xlabel('Year')

plt.ylabel('Number of Movies')

plt.legend()

plt.show()

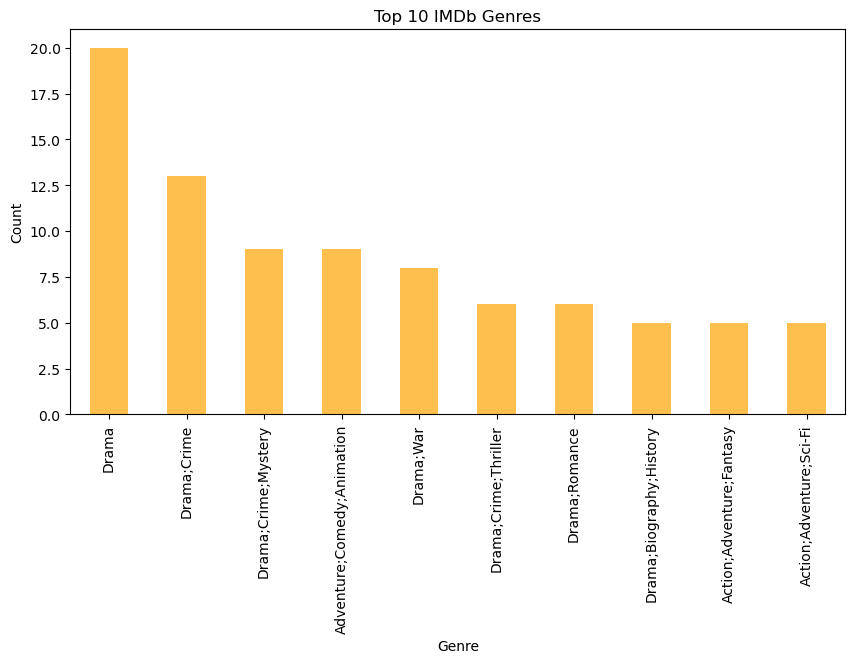

# Analyze genre distribution

imdb_genres = imdb_data['IMDB_Genre'].str.split(',').explode().str.strip().value_counts()

plt.figure(figsize=(10, 5))

imdb_genres.head(10).plot(kind='bar', alpha=0.7, color='orange')

plt.title('Top 10 IMDb Genres')

plt.xlabel('Genre')

plt.ylabel('Count')

plt.show()

# Top directors by movie count

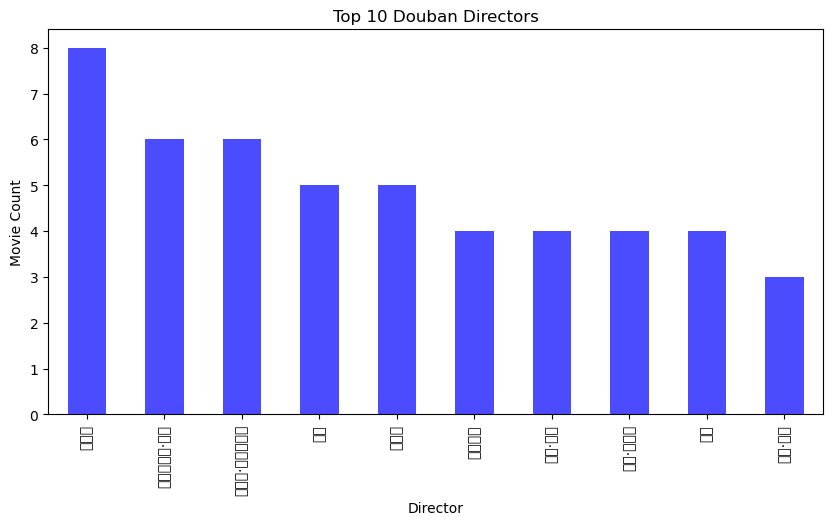

douban_directors = douban_data['Douban_Info'].str.extract(r'导演: (.+?) ').dropna()

douban_top_directors = douban_directors[0].value_counts().head(10)

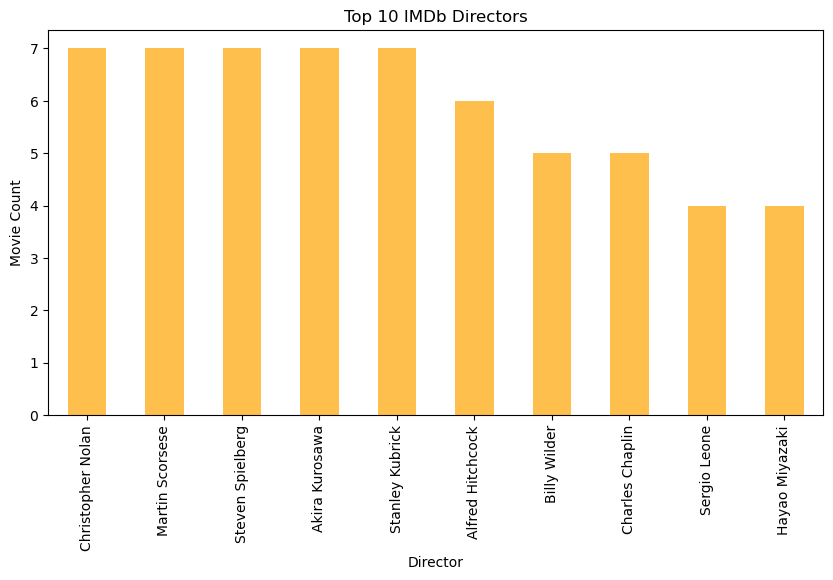

imdb_top_directors = imdb_data['IMDB_Director'].value_counts().head(10)

plt.figure(figsize=(10, 5))

douban_top_directors.plot(kind='bar', alpha=0.7, label='Douban', color='blue')

plt.title('Top 10 Douban Directors')

plt.xlabel('Director')

plt.ylabel('Movie Count')

plt.show()

plt.figure(figsize=(10, 5))

imdb_top_directors.plot(kind='bar', alpha=0.7, label='IMDb', color='orange')

plt.title('Top 10 IMDb Directors')

plt.xlabel('Director')

plt.ylabel('Movie Count')

plt.show()

# Save overlapping movies to a CSV file

overlap_movies.to_csv('overlap_movies.csv', index=False)

# Print results

print(f"豆瓣平均评分: {douban_avg_score}")

print(f"IMDb平均评分: {imdb_avg_score}")

print(f"重叠电影数量: {len(overlap_movies)}")

豆瓣平均评分: 8.9396

IMDb平均评分: 8.254

重叠电影数量: 0